You've probably interacted with a dozen different blobs before finishing your morning coffee. Every time you scroll through a Netflix thumbnail gallery or listen to a voice memo from your mom, you're pulling bits of information from a massive digital warehouse. In the tech world, we call this blob data.

It’s messy. It’s huge. It doesn’t fit into the neat little rows and columns of an Excel sheet or a traditional SQL database.

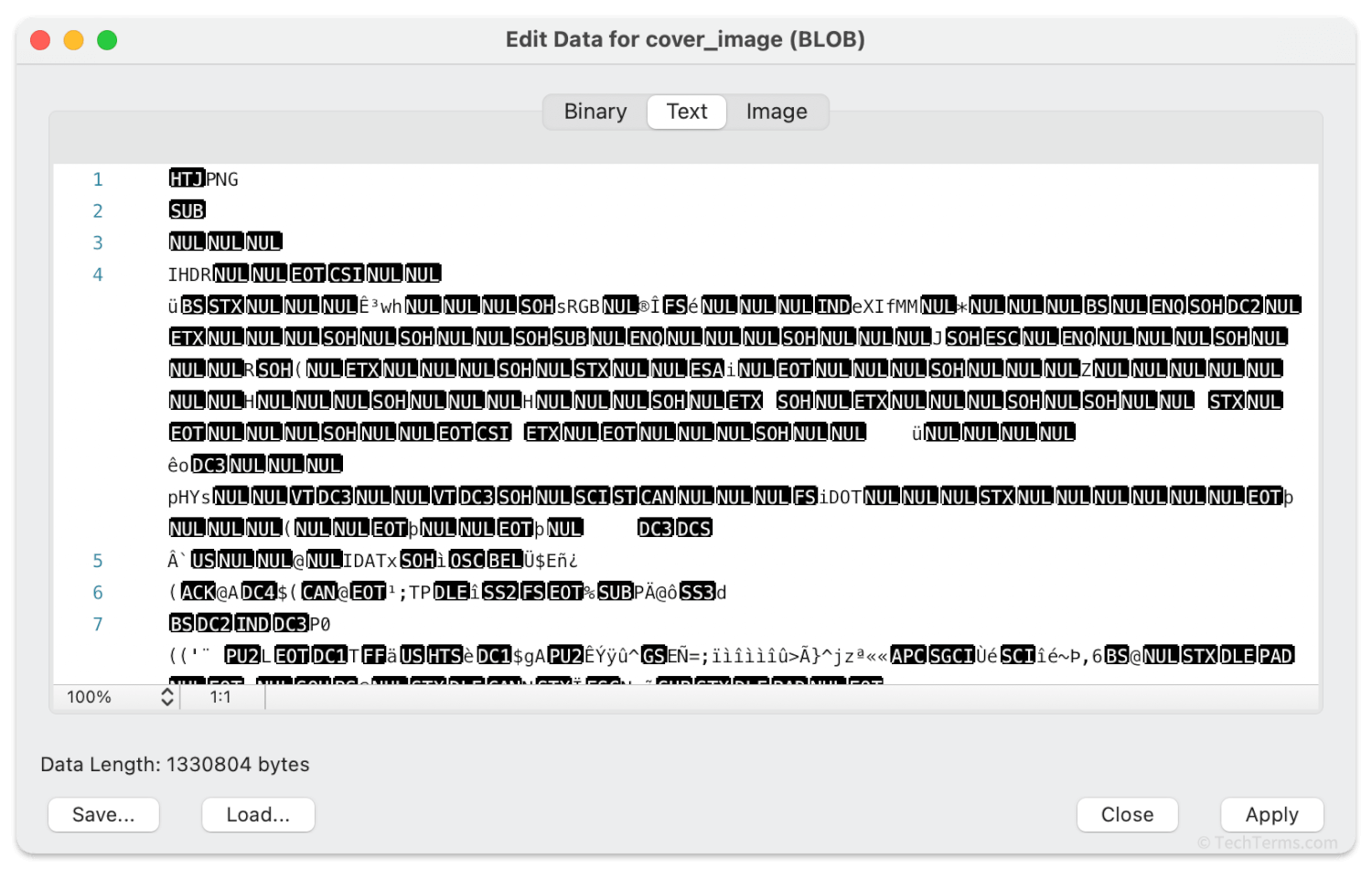

BLOB stands for Binary Large Object. It sounds like something out of a 1950s sci-fi flick, but it's actually the backbone of the modern internet. Most people think data is just numbers and names. By commonly cited industry estimates, that structured kind is only around 20% of the world's information. The rest? It's the "stuff": the images, the 4K video files, the raw sensor logs from a self-driving Tesla, and the audio snippets that Siri tries to understand.

What Blob Data Actually Is (And Isn't)

Think of a standard database like a very organized filing cabinet. Everything has a specific folder, a label, and a size limit. Now, imagine you have a giant, five-ton boulder that you need to store. It won't fit in the cabinet. You can't break it into pieces without ruining it. So, you throw it into a giant warehouse, slap a unique ID tag on it, and call it a day.

That boulder is your blob.

Technically, a blob is a collection of binary data stored as a single entity. Databases don't "read" what's inside a blob. To the database, it's just a giant pile of zeros and ones. It doesn't care if it's a JPEG of a cat or a firmware update for a Boeing 747. It just holds it.

Azure Blob Storage names three types explicitly, and they're the ones you'll usually hear engineers argue about:

- Block Blobs: These are for files that are read from beginning to end, like a movie file or a document. They are made of "blocks" that can be uploaded individually, even in parallel, and then committed together as one blob, which makes large uploads faster.

- Append Blobs: Think of these like a diary. You’re only ever adding new info to the end. These are perfect for logging data from a server.

- Page Blobs: These are more specialized. They allow for random read and write operations, and are often used for virtual hard disk (VHD) files.
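To make the block idea concrete, here's a minimal in-memory sketch. It is a toy, not any provider's SDK: `split_into_blocks`, `commit_blocks`, the block ID format, and the 8-byte block size are all made up for illustration.

```python
def split_into_blocks(data: bytes, block_size: int) -> dict[str, bytes]:
    """Split data into fixed-size blocks keyed by a zero-padded, sortable ID."""
    blocks = {}
    for index, offset in enumerate(range(0, len(data), block_size)):
        block_id = f"block-{index:06d}"  # hypothetical ID format
        blocks[block_id] = data[offset:offset + block_size]
    return blocks


def commit_blocks(staged: dict[str, bytes], block_ids: list[str]) -> bytes:
    """Assemble the final blob from staged blocks, in the committed order."""
    return b"".join(staged[block_id] for block_id in block_ids)


original = b"pretend this is a very large video file"
blocks = split_into_blocks(original, block_size=8)

# Blocks can arrive in any order (think parallel uploads)...
staged = {}
for block_id in reversed(list(blocks)):
    staged[block_id] = blocks[block_id]

# ...because the commit list, not the arrival order, defines the blob.
blob = commit_blocks(staged, sorted(staged))
print(blob == original)
```

The design point is that a failed upload only costs you one block, not the whole file, since nothing is final until the commit.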

Why Can’t We Just Put It in a Regular Database?

You could. But it would be a disaster.

If you try to stuff a 2GB video file into a traditional relational database like MySQL or PostgreSQL, you're going to experience what we call "performance degradation." Basically, the database gets sluggish. It wasn't built to move giant chunks of binary. It was built to compare strings and calculate sums. (In PostgreSQL, a single bytea value tops out at 1GB, so that file wouldn't even fit in one column.) When you force it to handle blobs, the backups take forever, queries that touch those rows slow down, and your cloud bill starts looking like a phone number.

This is why companies like Amazon (S3), Microsoft (Azure Blob Storage), and Google (Cloud Storage) built dedicated "Object Storage" services.

They decoupled the metadata from the actual file. The "name" and "size" of the file might live in a fast database, but the actual "meat" of the file stays in the blob store. It's cheaper per gigabyte. It's built for moving large objects. It scales almost without limit. You could store a petabyte of data in a blob store and it wouldn't blink. Try that with a standard SQL server and you'll be looking for a new job by Monday.
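Here's a rough sketch of that split, using only the Python standard library. The dict `blob_store` is a stand-in for a real object store, and `save_file`, `load_file`, and the `files` table are hypothetical names invented for this example.

```python
import hashlib
import sqlite3

# Stand-in for an object store: keys map to raw, opaque bytes.
blob_store: dict[str, bytes] = {}

# The relational side only ever sees small, queryable metadata.
db = sqlite3.connect(":memory:")
db.execute(
    "CREATE TABLE files (name TEXT PRIMARY KEY, size INTEGER, blob_key TEXT)"
)


def save_file(name: str, data: bytes) -> str:
    """Put the bytes in the blob store and only the metadata in the database."""
    blob_key = hashlib.sha256(data).hexdigest()  # the "unique ID tag"
    blob_store[blob_key] = data
    db.execute(
        "INSERT INTO files (name, size, blob_key) VALUES (?, ?, ?)",
        (name, len(data), blob_key),
    )
    return blob_key


def load_file(name: str) -> bytes:
    """Ask the database where the blob lives, then fetch it from the store."""
    row = db.execute(
        "SELECT blob_key FROM files WHERE name = ?", (name,)
    ).fetchone()
    if row is None:
        raise KeyError(name)
    return blob_store[row[0]]


save_file("cat.jpg", b"\xff\xd8\xff fake jpeg bytes")
print(len(load_file("cat.jpg")))
```

The database row stays tiny no matter how big the file gets, which is the whole point.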

The Real-World Impact

Take a company like Spotify. They don't store millions of high-fidelity songs in a table. They use blob data storage. When you hit play, the app asks the database for the location of the song, and then it streams the blob directly to your device.

It's about efficiency.

The Cost Factor Nobody Tells You About

Storing blobs is cheap. Moving them is expensive.

If you're an architect setting up a system, you have to worry about "egress fees." Cloud providers are like hotels that let you check in for free but charge you $50 to use the elevator to leave. Storing a terabyte of raw image data might cost you $20 a month, but if a million people download those images, your bill will explode.
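A quick back-of-the-envelope calculation shows how the two line items diverge. Every number here is an assumption for illustration, not a quote from any provider: $0.02 per GB-month for storage (which matches the $20 per terabyte above), $0.09 per GB for egress, and 2 MB per image.

```python
def monthly_storage_cost(stored_gb: float, price_per_gb_month: float) -> float:
    """Cost of keeping data at rest for one month."""
    return stored_gb * price_per_gb_month


def egress_cost(downloads: int, avg_object_mb: float, price_per_gb: float) -> float:
    """Cost of the bytes that leave the provider's network."""
    transferred_gb = downloads * avg_object_mb / 1000
    return transferred_gb * price_per_gb


# Assumed prices and sizes; check your own provider's pricing page.
storage = monthly_storage_cost(stored_gb=1000, price_per_gb_month=0.02)
egress = egress_cost(downloads=1_000_000, avg_object_mb=2, price_per_gb=0.09)

print(f"storage: ${storage:.2f} per month")
print(f"egress:  ${egress:.2f} for one million downloads")
```

Under these assumptions the downloads cost several times more than a month of storage, and that gap grows with every extra download while the storage line stays flat.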

Then there are the "tiers."

- Hot Storage: For data you need right now (like your profile picture).

- Cool Storage: For stuff you might need once a month (like last month's invoices).

- Archive/Cold Storage: This is the digital equivalent of a basement. It's incredibly cheap, but it can take hours to "rehydrate" (fetch) the data when you want it, up to around 15 on some providers.

Experts like Corey Quinn often point out that the complexity of cloud billing usually stems from these data movement patterns. It's not just about what a blob is; it's about where it's going and how fast it needs to get there.

Security and the "Leaky Bucket" Problem

We have to talk about security because it's one of the biggest headaches in the industry. You've probably seen news headlines about "Millions of Records Leaked Online." Very often, that wasn't a sophisticated hack. It was a "leaky bucket": a blob storage container that someone accidentally set to "Public."

Because blob storage is designed to be accessed over HTTP, with every blob reachable at its own URL, if you don't lock the door, anyone with the link can see the content.

Encryption is non-negotiable here. You need encryption at rest (while the data is sitting there) and encryption in transit (while it's moving). Most modern providers handle this by default now, but older legacy systems are still a bit of a minefield.

The Machine Learning Connection

If you're into AI, you need to understand blob data because it is the fuel for the models everyone is talking about.

Large Language Models (LLMs) like GPT-4 weren't trained on tidy spreadsheets. They were trained on "unstructured data"—billions of blobs containing web pages, books, and code. Image generators like Midjourney were trained on billions of image blobs.

Without the ability to store and retrieve massive amounts of raw binary data cheaply, the "AI Revolution" literally couldn't exist. We would have run out of storage space and money back in 2012.

Practical Next Steps for Managing Blobs

If you’re actually looking to implement this or just trying to clean up a tech stack, don't just dump everything into a single container.

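First, categorize by access frequency. If you have logs from three years ago that you only keep for legal reasons, move them to Archive storage immediately. Depending on your provider's pricing, that can cut the storage cost of that data by roughly 90%, as long as you can live with slow, paid retrievals.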

Second, use a Content Delivery Network (CDN). If you are serving blobs (like images or videos) to users, don't let them pull directly from your blob store. Use something like Cloudflare or Akamai. It puts a copy of the blob closer to the user, making your site faster and reducing the egress fees you pay your cloud provider.
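To see why a cache in front of the blob store cuts egress, here's a toy model. `EdgeCache` is a hypothetical class, the origin is just a dict, and real CDNs add expiry, eviction, and many edge locations that this sketch ignores.

```python
class EdgeCache:
    """Toy CDN edge: serves from its own copy, hits the origin only on a miss."""

    def __init__(self, origin: dict[str, bytes]):
        self.origin = origin
        self.cache: dict[str, bytes] = {}
        self.origin_bytes_served = 0  # what the blob store would bill as egress

    def get(self, key: str) -> bytes:
        if key not in self.cache:
            data = self.origin[key]
            self.origin_bytes_served += len(data)
            self.cache[key] = data
        return self.cache[key]


origin = {"/img/hero.jpg": b"x" * 1024}  # one 1 KiB "image"
edge = EdgeCache(origin)

for _ in range(1000):
    edge.get("/img/hero.jpg")

# 1,000 requests reached users, but the origin only sent the bytes once.
print(edge.origin_bytes_served)
```

Without the cache, the same thousand requests would have pulled the image from the blob store a thousand times.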

Third, automate your lifecycle policies. You shouldn't be manually deleting files. Set a rule: "If this blob hasn't been touched in 30 days, move it to Cool storage. If it's been a year, delete it."
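That rule is simple enough to sketch as a function. In practice you'd express it as a lifecycle policy in your provider's own configuration format rather than run code like this yourself; `lifecycle_action` and its return values are hypothetical names used only to show the logic.

```python
from datetime import datetime, timedelta, timezone


def lifecycle_action(last_accessed: datetime, now: datetime) -> str:
    """Decide what to do with a blob based on how long it has sat untouched."""
    idle = now - last_accessed
    if idle >= timedelta(days=365):
        return "delete"
    if idle >= timedelta(days=30):
        return "move-to-cool"
    return "keep"


now = datetime(2024, 5, 1, tzinfo=timezone.utc)
for days_idle in (3, 45, 400):
    action = lifecycle_action(now - timedelta(days=days_idle), now)
    print(f"{days_idle} days idle: {action}")
```

Note the order of the checks: the one-year rule has to come first, otherwise every old blob would match the 30-day rule and never get deleted.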

Blob data isn't just a technical term; it's the literal fabric of the internet. Understanding how to store it, move it, and secure it is the difference between a scalable application and a crashed server. Keep your metadata lean, your blobs remote, and for heaven's sake, check your permissions before you go live.

Actionable Insights for Implementation:

- Audit your current database for "Large Strings" or "Byte Arrays." If you have files larger than 1MB living inside a relational database, move them to an object store like AWS S3 or Azure Blob.

- Implement "Signed URLs" for security. This allows you to give a user temporary access (e.g., 5 minutes) to a private file without making the whole container or bucket public.

- Review your "Egress" costs in your cloud billing dashboard. If "Data Transfer Out" is your highest cost, it's time to implement a CDN.

- Use naming conventions that include dates (e.g., /2024/05/01/image.jpg) to make lifecycle management rules easier to write.
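If signed URLs sound like magic, the core idea fits in a few lines: the server signs the path plus an expiry time with a secret, and later refuses any request whose signature doesn't match or whose time has passed. This is a simplified sketch of the concept, not the signing scheme of S3, Azure, or any other provider; `sign_url`, `verify_url`, `SECRET_KEY`, and the query parameter names are all assumptions.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

SECRET_KEY = b"demo-secret-not-for-production"  # hypothetical server-side secret


def _signature(path: str, expires: int) -> str:
    message = f"{path}:{expires}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def sign_url(path: str, ttl_seconds: int, now: Optional[float] = None) -> str:
    """Return the path with an expiry time and an HMAC signature attached."""
    now = time.time() if now is None else now
    expires = int(now) + ttl_seconds
    query = urlencode({"expires": expires, "signature": _signature(path, expires)})
    return f"{path}?{query}"


def verify_url(url: str, now: Optional[float] = None) -> bool:
    """Accept the URL only if the signature matches and it has not expired."""
    now = time.time() if now is None else now
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["expires"][0])
        signature = params["signature"][0]
    except (KeyError, ValueError):
        return False
    expected = _signature(parsed.path, expires)
    # Constant-time comparison avoids leaking how much of the signature matched.
    matches = hmac.compare_digest(signature.encode(), expected.encode())
    return matches and now < expires


url = sign_url("/2024/05/01/image.jpg", ttl_seconds=300)  # valid for 5 minutes
print(verify_url(url))
```

Because the path is part of the signed message, a link for one image can't be edited to fetch a different one.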