You've probably seen the viral clips. A realistic cat wearing sunglasses cooks a five-course meal, or a futuristic city glows under a neon rainstorm. If you spend any time on social media, the comments are usually flooded with one specific question: Can ChatGPT create videos like this? Honestly, the answer is a bit of a "yes, but actually no" situation. It's confusing because OpenAI, the company behind ChatGPT, keeps moving the goalposts on what the tool can actually do.

People want a "magic button." They want to type "make me a movie about a space pirate" and hit enter. We aren't quite there yet, but we are a lot closer than we were even six months ago.

The Reality of ChatGPT and Video Generation

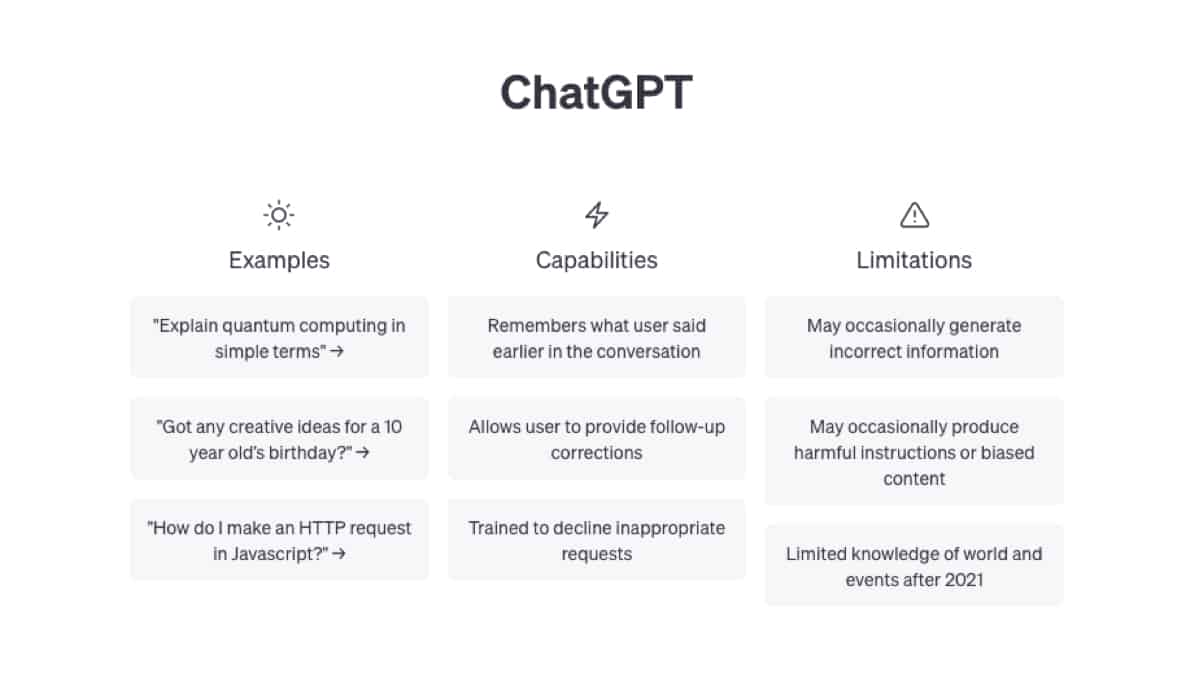

Let’s get the technical stuff out of the way first. ChatGPT itself is a Large Language Model (LLM). It’s a genius at words. It’s a decent coder. It’s a mediocre poet. But by itself, it doesn't "render" video files. If you open a basic GPT-4o window and ask for an MP4, it’s going to apologize to you.

However, the ecosystem has changed.

OpenAI announced Sora in early 2024, and that changed the conversation entirely. Sora is OpenAI's dedicated text-to-video model. It sits under the broader OpenAI research umbrella, but it isn't available inside the ChatGPT interface for every casual user yet. Most of what you see online right now comes either from a limited group of early testers (visual artists, filmmakers, and creative agencies) or isn't ChatGPT at all. Often, people are actually using tools like Runway Gen-3, Luma Dream Machine, or Kling AI and just calling it "ChatGPT" because that's the brand name everyone knows.

How it works right now

If you have a Plus subscription today, you can sort of make videos, but it’s a multi-step shuffle. You use ChatGPT to write a highly detailed prompt. Then, you use a GPT (a custom version of the bot) that connects to a third-party service like InVideo AI or HeyGen.

ChatGPT acts as the brain. It writes the script, plans the scenes, and then sends those instructions to a different engine that actually handles the pixels. It's like ChatGPT is the director and InVideo is the camera crew. You aren't getting a Hollywood blockbuster this way. Usually, you get a slideshow-style video with stock footage and an AI voiceover. It’s great for a quick YouTube Short or a corporate "how-to" video, but it lacks that cinematic soul people are hunting for.

Sora: The Game Changer We’re Still Waiting For

Everyone is obsessed with Sora because it actually understands physics. Or at least, it tries to. When people ask whether ChatGPT can create videos using the Sora model, they're talking about a system that grasps that if a person bites a cookie, the cookie should have a bite mark in it afterward.

That sounds simple. It’s incredibly hard for AI.

Early video generators were basically "hallucinating" movement. They would create "liquid" people who grew extra limbs or disappeared into walls. Sora uses a transformer architecture, the same family of model behind GPT-4, but instead of words it operates on "visual patches": small chunks of video that each cover a few frames and a small region of the picture. It treats these snippets of video the way an LLM treats tokens in a sentence.
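To make the "visual patches" idea a little more concrete, here is a minimal sketch, assuming a video stored as a NumPy array of shape (frames, height, width, channels). It cuts the clip into small blocks of time and space and flattens each block into one row, so the whole video becomes a sequence of "tokens." The function name `patchify` and the patch sizes are illustrative assumptions, not OpenAI's code; OpenAI has said Sora patches a compressed (latent) version of the video rather than raw pixels.

```python
import numpy as np

def patchify(video: np.ndarray, pt: int, ph: int, pw: int) -> np.ndarray:
    """Split a (T, H, W, C) video into flattened spacetime patches.

    Each patch covers `pt` frames and a `ph` x `pw` pixel region.
    Returns shape (num_patches, pt * ph * pw * C): one row per "token".
    Illustrative sketch only; real models patch compressed latents.
    """
    T, H, W, C = video.shape
    if T % pt or H % ph or W % pw:
        raise ValueError("video dimensions must be divisible by the patch size")
    # Expose the patch grid: (T/pt, pt, H/ph, ph, W/pw, pw, C)
    x = video.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    # Put the grid axes first, then the axes inside each patch
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    # One row per patch, ordered by time block, then row block, then column block
    return x.reshape(-1, pt * ph * pw * C)


# A tiny random "clip": 16 frames of 64x64 RGB
rng = np.random.default_rng(0)
clip = rng.random((16, 64, 64, 3))
tokens = patchify(clip, pt=4, ph=16, pw=16)
print(tokens.shape)  # (64, 3072)
```

A 16-frame, 64x64 clip becomes 64 tokens of 3,072 numbers each. Longer or sharper video simply produces more tokens, which hints at why video generation is so hungry for compute.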

Why can't I use it yet?

OpenAI is being incredibly cautious. They're terrified of deepfakes and the potential for misinformation during major elections. They’ve been working with "red teamers"—experts who try to break the tool or make it produce harmful content—to build guardrails. This is why most of us are stuck watching demos instead of making our own clips.

There's also the "compute" problem. Generating a 60-second high-definition video takes a massive amount of processing power. If OpenAI opened the floodgates to 100 million users today, the servers would probably melt. They have to scale the infrastructure before "ChatGPT Video" becomes a standard feature for everyone.

Beyond OpenAI: The Real Competitors

If you're frustrated waiting for OpenAI to give you video powers, you should look at what's already out there. The market is moving faster than a caffeine-addicted squirrel.

- Runway (Gen-3 Alpha): This is arguably the leader for "cinematic" AI video right now. It allows for incredible control over camera movement. You can tell it to "pan left" or "zoom in" with shocking accuracy.

- Luma Dream Machine: This one went viral because it's free to try (up to a point) and it's fast. It’s fantastic at taking a still image and making it move realistically.

- Kling: A model from the Chinese company Kuaishou that recently took the internet by storm because it can generate videos up to two minutes long with very high consistency.

- Pika Labs: Great for animation and specific "vibe" videos. They have a very cool feature where you can "lip-sync" a character to any audio file.

Honestly, if you need to make a video right now, these tools are actually better than ChatGPT's current public-facing options.

The "GPT-to-Video" Workflow

So, how do the pros do it? They don't just ask the bot for a video. They use a "modular" workflow. It's a bit of a grind, but it produces the best results.

First, you use ChatGPT to brainstorm the concept. You don't just say "make a video about a forest." You ask it to "write a cinematic screenplay for a 15-second sequence of a moss-covered ancient forest in the Pacific Northwest, shot on 35mm film, golden hour lighting."
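The jump from "a video about a forest" to that detailed request is mostly a matter of structure. As a rough sketch, here is a hypothetical helper that assembles the same kind of request from separate ingredients (subject, length, film look, lighting, camera moves). The function and its fields are illustrative assumptions, not a feature of ChatGPT or any video tool; the result is just text you would paste into the chat.

```python
def build_scene_request(subject: str, seconds: int, film_look: str,
                        lighting: str, camera_moves: list[str]) -> str:
    """Assemble a detailed screenplay request from individual ingredients.

    Hypothetical helper for illustration; it only builds a text prompt.
    """
    parts = [
        f"Write a cinematic screenplay for a {seconds}-second sequence of {subject}",
        f"shot on {film_look}",
        f"{lighting} lighting",
    ]
    # Camera language is optional; add it only when provided
    if camera_moves:
        parts.append("camera: " + ", ".join(camera_moves))
    return ", ".join(parts) + "."


print(build_scene_request(
    subject="a moss-covered ancient forest in the Pacific Northwest",
    seconds=15,
    film_look="35mm film",
    lighting="golden hour",
    camera_moves=["slow dolly in", "shallow depth of field"],
))
```

Keeping each ingredient separate makes it easy to swap one thing (say, the lighting) and compare results without rewriting the whole prompt.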

Next, you take that specific prompt and plug it into a dedicated video generator. If the video needs a narrator, you take the script ChatGPT wrote and move it over to ElevenLabs, which is widely considered one of the best AI voice tools on the market. Finally, you stitch it all together in a traditional editor like CapCut or Premiere Pro.

It’s a Frankenstein's monster of tech. It works.

Limitations: What AI Video Still Can't Do

We need to be real here. AI video is currently in its "awkward teenage years." It’s impressive, but it’s also kind of a mess.

- Temporal Consistency: This is the big one. If a character is wearing a red hat in the first second of the video, that hat might turn blue or disappear by the fifth second. The AI "forgets" what happened a few frames ago.

- Complex Physics: Ask an AI to show someone pouring water into a glass. Half the time, the water goes through the glass or the glass turns into a bird. It doesn't truly understand "gravity" or "containment."

- Text Rendering: Just like early AI image generators struggled with fingers, video generators struggle with writing. If you want a sign in your video to say "Welcome Home," it will probably say something like "Wllcome Hmoe" in a demonic font.

- Duration: Most high-quality AI videos are capped at 5 to 10 seconds. Creating a full-length movie requires hundreds of these tiny clips to be manually edited together.
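The arithmetic behind that last point is simple but sobering. Here is a quick sketch, assuming a 90-minute feature (a typical length picked for illustration) and that every single generation is usable:

```python
import math

def clips_needed(runtime_minutes: float, clip_seconds: float) -> int:
    """Minimum number of generated clips to fill a runtime, ignoring rejected takes."""
    return math.ceil(runtime_minutes * 60 / clip_seconds)

# Compare the two ends of the typical 5-10 second cap
for clip_len in (5, 10):
    print(f"{clip_len}-second clips: {clips_needed(90, clip_len)}")
```

That works out to roughly 540 to 1,080 clips. The real number is higher, because many generations get thrown away for the consistency and physics problems described above.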

The Ethics and The Law

We can't talk about whether ChatGPT can create videos without mentioning the massive legal cloud hanging over the whole industry.

Where did the training data come from? OpenAI and its rivals generally claim "fair use," but filmmakers and artists are suing. They argue that these models were trained on copyrighted movies and YouTube videos without permission or compensation. If a court decides this training was illegal, the way we use these tools could change overnight.

There's also the "human element." If an AI can generate a perfect commercial in 30 seconds, what happens to the junior editors and stock footage researchers? It’s a valid fear. The counter-argument is that these tools will act as "force multipliers," allowing a single person to produce an entire series on a shoestring budget.

Actionable Steps for Using AI Video Today

If you want to start creating, don't wait for a "perfect" ChatGPT video button. Start building your toolkit now.

- Get a ChatGPT Plus or Team account: This gives you access to the DALL-E 3 image generator. Use it to create a "style reference" image.

- Use "Image-to-Video" instead of "Text-to-Video": Most video models produce noticeably better, more consistent results if you give them a starting image. Generate a high-quality still in ChatGPT/DALL-E, then upload that still to Runway or Luma. It gives the AI a roadmap.

- Master the "Camera Prompt": When writing your prompts, use real cinematography terms. Mention "depth of field," "dolly zoom," "bokeh," or "handheld footage." These models have likely seen plenty of footage described in exactly these terms, so they tend to respond better to professional language than to generic descriptions.

- Fix the audio separately: Never rely on a video generator's built-in sound. Use ChatGPT to write a script, ElevenLabs for the voice, and a site like Udio or Suno if you need a background music track.

- Upscale your results: AI video is often grainy or low-res. Use a tool like Topaz Video AI to sharpen the footage and make it look professional.

The "one-click" future is coming, but for now, the best "AI filmmakers" are actually just really good at managing five different tools at once. It’s a weird, exciting, and slightly terrifying time to be a creator. Just remember that the tool is only as good as the person prompting it. A boring idea will still be a boring video, even if a supercomputer renders it for you.


Start small. Make a 5-second clip of something impossible. That's where the real fun begins.

Next Steps for You:

Check your OpenAI account settings to see if you have access to "Alpha" features, as they often roll out video-related experiments to small groups first. If you don't see anything, head over to Runway or Luma and try their "Image-to-Video" tool using an image you generated in ChatGPT. Comparing the results will show you exactly where the tech stands today.