You’ve finally captured it. That perfect sunset over the Amalfi Coast or the moment your kid actually smiled at the camera without making a weird face. Then you see it. A neon-orange trash can. Or maybe a photobomber in a "legalize it" t-shirt right behind your grandmother. It ruins the vibe. Naturally, you want to erase it from the photo immediately. But if you’ve tried the one-tap tools built into your phone lately, you probably noticed something. They often leave a blurry, digital smear that looks worse than the trash can did. It’s like someone tried to heal a wound with a piece of Scotch tape and some Vaseline.

Editing isn't just about deleting pixels; it’s about what you put back in.

Most people think of "erasing" as a subtractive process. It’s actually additive. When you remove a person from a beach shot, the software has to guess what the sand, waves, and horizon looked like behind them. If the AI is "dumb," it just clones the patch of sand next to it. That’s how you get those repeating patterns that scream "this was photoshopped." We’ve reached a weird plateau in tech where the tools are everywhere, but the skill to use them convincingly is still kinda rare.

The move from Content-Aware Fill to Generative AI

Back in 2010, Adobe dropped Content-Aware Fill into Photoshop CS5. It felt like literal black magic. You’d lasso a stray dog in your landscape shot, hit a button, and poof—gone. Well, mostly. It worked by looking at the surrounding pixels and trying to "stitch" them into the hole. It was great for grass. It was terrible for complex architecture or faces. If you tried to remove a power line against a brick building, the bricks would end up looking like a glitch in the Matrix.
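To make the "stitching from surrounding pixels" idea concrete, here is a minimal Python sketch of a much simpler cousin of Content-Aware Fill: diffusion inpainting, where every missing pixel is repeatedly replaced by the average of its neighbours. This is not Adobe's algorithm (Content-Aware Fill is patch-based); the function name `diffuse_fill` and the grayscale list-of-lists image format are assumptions made for illustration.

```python
def diffuse_fill(image, mask, iterations=200):
    """Fill pixels where mask[y][x] is True by repeatedly averaging their neighbours.

    image: list of lists of grayscale values; mask: same shape, True = hole.
    Returns a new image; the original is never modified.
    """
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]

    # Seed the hole with the mean of the known pixels so the loop settles faster.
    known = [out[y][x] for y in range(h) for x in range(w) if not mask[y][x]]
    start = sum(known) / len(known)
    for y in range(h):
        for x in range(w):
            if mask[y][x]:
                out[y][x] = start

    for _ in range(iterations):
        nxt = [row[:] for row in out]
        for y in range(h):
            for x in range(w):
                if not mask[y][x]:
                    continue  # original pixels stay exactly as they were
                neighbours = [out[ny][nx]
                              for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                              if 0 <= ny < h and 0 <= nx < w]
                nxt[y][x] = sum(neighbours) / len(neighbours)
        out = nxt
    return out


# Brick-like stripes: columns alternate between 0 and 255.
stripes = [[0 if x % 2 == 0 else 255 for x in range(5)] for _ in range(5)]
hole = [[(y, x) == (2, 2) for x in range(5)] for y in range(5)]
print(diffuse_fill(stripes, hole)[2][2])  # 127.5
```

On smooth areas like sky or grass this works fine. On the striped pattern, though, the hole (which should be 0, like the rest of its column) gets filled with a flat 127.5 gray, which is exactly the kind of glitch you see when a surrounding-pixel fill meets brickwork.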

Fast forward to now. We’ve entered the era of Generative Fill and Magic Erasers.

The tech has shifted from "cloning" to "imagining." Google’s Magic Eraser on the Pixel series and Samsung’s Object Eraser don't just copy nearby colors. They rely on machine-learning models, and the newest generative tools are largely built on diffusion models. These models are trained on enormous image collections, so they can make a plausible guess at what the bottom of a brick wall looks like even if it’s hidden behind a parked car. But even this has limits. Have you ever tried to remove a person and the AI replaced them with a weirdly shaped rock that has human skin texture? It happens. A lot.

Why lighting is the silent killer of edits

Shadows are the snitches of the digital world. You can erase objects from your photos all day long, but if you forget the shadow that object cast on the ground, the "ghost" remains. Your brain might not consciously see the shadow, but it feels that something is "off."

A common mistake is focusing purely on the object itself. Let’s say you’re removing a wedding guest from the background of a bridal portrait. You zap the guest. Cool. But that guest was blocking a light source, or casting a long shadow across the grass toward the bride. If you leave that shadow, the lighting in the photo no longer makes physical sense. A rough rule of thumb among professional retouchers: removing the object is about 20% of the job, and the other 80% is fixing the light, grain, and texture around the "wound."

Tool Breakdown: What actually works in 2026

If you're serious about this, stop using the "one-tap" fix for everything. Different problems require different hammers.

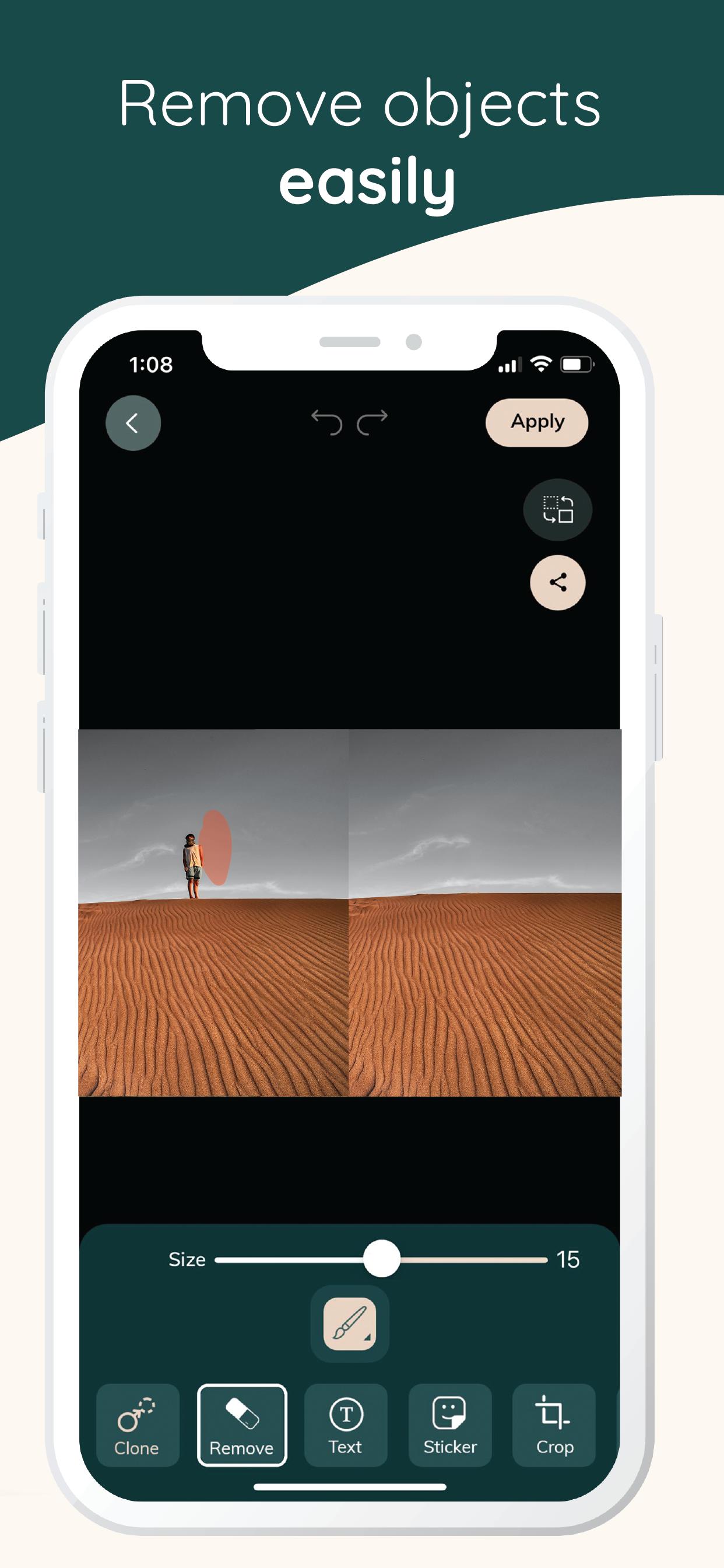

1. The Smartphone Native Tools

Google Photos and Apple Photos have integrated these features directly into the gallery. They are "good enough" for Instagram stories. If the background is simple—think blue sky or out-of-focus trees—use these. They are fast. They are free. But they struggle with "edge tension." That’s the crisp line where a person’s shoulder meets the background. If you see a halo or a glow around where the object used to be, the native tool failed.

2. Adobe Firefly and Photoshop

This is still the gold standard for a reason. Generative Fill lets you prompt the software. Instead of just hitting "erase," you can tell it to "replace with a wooden park bench" or "fill with ocean waves." It generally does a good job of matching the perspective and lighting of the original shot, although you may still need to add the grain back by hand. It’s scary good, honestly.

3. Specialized AI Web Apps

Sites like Cleanup.pictures or Photoroom do the heavy lifting on their own servers. Sometimes they outperform phone apps because they aren't limited by the processor in your pocket, which lets them run larger models that can handle more complex textures like fabric or wood grain.

The "Clone Stamp" is still your best friend

Sometimes AI is too smart for its own good. It tries to be creative when you just need it to be boring. If you’re trying to remove a tiny dust spot (from a dirty lens or sensor) or a small blemish, the old-school Clone Stamp tool often beats any AI. Why? Because it gives you 100% control. You pick the source, you pick the destination. AI is a guess; the Clone Stamp is a copy. If you’re working on a high-res print, don't let a machine guess what the skin texture should look like. Copy it from a clear patch of skin nearby.
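Under the hood, a clone stamp really is just a copy with an offset. Here is a minimal sketch, assuming a grayscale image stored as a list of lists and a hard round brush; the name `clone_stamp` and its parameters are hypothetical, and real editors layer soft edges and opacity on top of this.

```python
def clone_stamp(image, src, dst, radius):
    """Copy a round patch centred on src onto a patch centred on dst.

    src and dst are (y, x) tuples. Pixels are always read from the untouched
    original, so overlapping source and destination areas don't smear.
    Returns a new image.
    """
    h, w = len(image), len(image[0])
    out = [row[:] for row in image]
    dy, dx = src[0] - dst[0], src[1] - dst[1]  # fixed offset: you pick the source
    for y in range(dst[0] - radius, dst[0] + radius + 1):
        for x in range(dst[1] - radius, dst[1] + radius + 1):
            if (y - dst[0]) ** 2 + (x - dst[1]) ** 2 > radius ** 2:
                continue  # outside the round brush
            sy, sx = y + dy, x + dx
            if 0 <= y < h and 0 <= x < w and 0 <= sy < h and 0 <= sx < w:
                out[y][x] = image[sy][sx]
    return out


# A flat 50-gray patch of "skin" with one dark dust spot in the middle.
skin = [[50] * 5 for _ in range(5)]
skin[2][2] = 0
print(clone_stamp(skin, src=(0, 2), dst=(2, 2), radius=1)[2][2])  # 50
```

Nothing here is guessed: every new pixel is an exact copy of a pixel you chose, which is why the tool is so predictable on fine texture.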

Advanced Techniques: Dealing with "Sticky" Backgrounds

What happens when the object you want to remove is behind something else? Like a fence? This is the nightmare scenario. To erase an object from a photo with this kind of depth, you have to work in layers.

Most people just try to rub out the object and hope the fence stays intact. It won't. The AI will eat the fence. The pro move here is to mask the foreground (the fence) first. You basically tell the computer: "Don't touch this part." Then you edit the layer behind it. It’s tedious. It’s slow. But it’s the only way to avoid that "melted plastic" look that ruins professional photography.
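The "don't touch this part" idea boils down to a protection mask that overrides whatever the fill tool wants to do. A minimal sketch, assuming grayscale list-of-lists images; `masked_edit` is a hypothetical helper, and `fill` is a stand-in for whatever actually generates the new background pixels (AI, clone stamp, or anything else).

```python
def masked_edit(image, edit_mask, protect_mask, fill):
    """Replace the pixels you scrubbed over (edit_mask) unless they are protected.

    fill(y, x) returns the new value for one pixel.
    Returns a new image; the original stays intact.
    """
    out = [row[:] for row in image]
    for y, row in enumerate(image):
        for x in range(len(row)):
            if edit_mask[y][x] and not protect_mask[y][x]:
                out[y][x] = fill(y, x)
    return out


# A 3x3 scene: a dark fence post (0) in the middle column, a person (99) behind it.
scene = [[99, 0, 99] for _ in range(3)]
scrubbed = [[True] * 3 for _ in range(3)]              # a sloppy brush covered everything
fence = [[x == 1 for x in range(3)] for _ in range(3)]  # mask the fence first
result = masked_edit(scene, scrubbed, fence, lambda y, x: 200)  # 200 = made-up background
print(result)  # [[200, 0, 200], [200, 0, 200], [200, 0, 200]]
```

Even though the brush covered the fence, the post survives untouched because the mask wins over the edit.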

The "Plate" Method

If you're a real nerd about this, you can use the "clean plate" technique. If you're taking a photo in a crowded place, take five photos from the exact same spot (a tripod helps) over the course of a minute. People move; the buildings don't. Later, you can stack these photos. Since the people are in a different place in each shot, the software can look through the "holes" and see the static background behind them. You don't even have to "erase" anything; you just mask in the parts of the background that were clear in the other shots.
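When every spot of background is clear in more than half of the frames, a per-pixel median across the stack does that masking for you automatically. A minimal sketch, assuming the frames are already perfectly aligned and stored as grayscale lists of lists; `clean_plate` is a hypothetical name.

```python
from statistics import median


def clean_plate(frames):
    """Return the per-pixel median of a list of aligned frames (same size)."""
    height, width = len(frames[0]), len(frames[0][0])
    return [[median([frame[y][x] for frame in frames]) for x in range(width)]
            for y in range(height)]


background = [10, 20, 30, 40]
frames = []
for person_at in (0, 1, 2, None, None):  # a bright (255) tourist walks through the shot
    row = background[:]
    if person_at is not None:
        row[person_at] = 255
    frames.append([row])                 # each frame is a one-row image
print(clean_plate(frames))  # [[10, 20, 30, 40]]
```

The catch: if someone stands in the same place for most of the frames, the median keeps them, which is when masking by hand is still the better option.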

Common Myths about Object Removal

- "It's just one click now." No. For a high-quality result that can be printed on a canvas, it almost never is. You usually need to go back in and manually add "noise" or "grain" to the edited area. Modern digital cameras have a specific pattern of sensor noise. AI-generated fills are often "too smooth," which makes them stand out like a sore thumb.

- "Resolution doesn't matter." It matters more than ever. If you try to remove an object from a low-res WhatsApp photo, the AI doesn't have enough data to reconstruct the texture. You’ll get a blurry mess. Always start with the RAW file or the highest-resolution JPEG you have.

- "You can't tell it was edited." If you know where to look, you can almost always tell. Look for "edge artifacts" or lighting inconsistencies. The goal isn't to make it perfect; it's to make it "invisible" to the casual observer.
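Adding grain back to a too-smooth fill is easy to sketch. This minimal Python version adds Gaussian noise only inside the edited area. `add_grain` is a hypothetical helper, and treating `amount` as a noise standard deviation relative to the maximum pixel value is an assumption; real "Add Noise" filters define their amount sliders in their own ways.

```python
import random


def add_grain(image, mask, amount=0.02, max_value=255, seed=None):
    """Add Gaussian grain to masked pixels only.

    amount is the noise standard deviation as a fraction of max_value
    (an assumed convention, not any specific app's slider).
    Returns a new image; unmasked pixels are copied unchanged.
    """
    rng = random.Random(seed)  # seeded so the same edit can be reproduced
    sigma = amount * max_value
    out = [row[:] for row in image]
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if mask[y][x]:
                noisy = value + rng.gauss(0, sigma)
                out[y][x] = min(max_value, max(0, round(noisy)))  # clamp to valid range
    return out


# A perfectly smooth AI fill on the right half of a flat 128-gray patch.
smooth = [[128] * 10 for _ in range(10)]
edited_area = [[x >= 5 for x in range(10)] for _ in range(10)]
grainy = add_grain(smooth, edited_area, amount=0.02, seed=1)
```

Keeping the noise inside the mask matters: the untouched parts of the photo already carry the camera's own grain, so you only want to "dirty up" the patch that came out too clean.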

Ethics: When should you stop?

We have to talk about the "uncanny valley." There’s a point where a photo stops being a memory and starts being a composite. Removing a power line is one thing. Removing your "annoying" cousin from a family reunion photo is another. There’s a growing debate in the photography world about the "integrity of the frame." If you change the scene too much, is it still a photograph? Or is it a digital illustration? Honestly, that's up to you, but keep in mind that the more you remove, the more likely the photo is to feel "hollow."

Actionable Steps for Your Next Edit

Don't just jump in and start scrubbing with a brush. That’s how you get bad results. Follow this workflow instead to get a clean finish.

- Duplicate your layer. Never, ever edit on the original "Background" layer. If you mess up, you need to be able to go back.

- Zoom out first. Before you get into the pixels, look at the whole composition. Does the object actually need to go? Sometimes a "distraction" actually provides balance or scale.

- Use a soft brush. Whether you're using a phone or a PC, keep your brush edges soft. Hard edges create visible seams. You want the new pixels to "bleed" into the old ones.

- Work in small strokes. Don't try to erase a whole car in one go. Do the tires. Then the door. Then the windows. This gives the AI more "context" to work with at each step.

- Match the grain. After the object is gone, if the area looks too smooth, use a "Grain" or "Add Noise" filter. Set it to a tiny amount—maybe 1% or 2%. It sounds crazy, but adding "dirt" back into the photo makes the edit look more real.

- Check the shadows. Look at where the sun is coming from. If you removed a person, follow the angle of other shadows in the photo. If there’s a stray shadow left on the ground, use a low-opacity clone stamp to blend it away into the surrounding pavement or grass.
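The soft-brush advice is easier to see in numbers. This minimal sketch blends a patch into the photo through a round brush, assuming grayscale list-of-lists images and a simple linear falloff; `brush_alpha` and `blend_with_brush` are hypothetical helpers, not any app's actual brush engine.

```python
import math


def brush_alpha(distance, radius, hardness=0.0):
    """Opacity of a round brush at `distance` from its centre.

    hardness=1.0 is a hard edge (full opacity right up to the rim);
    hardness=0.0 fades linearly from the centre out to the rim.
    """
    if distance >= radius:
        return 0.0
    core = hardness * radius  # fully opaque inner disc
    if distance <= core:
        return 1.0
    return (radius - distance) / (radius - core)


def blend_with_brush(image, patch, centre, radius, hardness=0.0):
    """Mix `patch` (same size as image) into `image` using the brush's opacity."""
    cy, cx = centre
    out = [row[:] for row in image]
    for y, row in enumerate(image):
        for x, old in enumerate(row):
            a = brush_alpha(math.hypot(y - cy, x - cx), radius, hardness)
            out[y][x] = a * patch[y][x] + (1 - a) * old
    return out


photo = [[0, 0, 0, 0, 0]]
fill = [[100, 100, 100, 100, 100]]
print(blend_with_brush(photo, fill, (0, 2), radius=2, hardness=1.0))
# [[0.0, 100.0, 100.0, 100.0, 0.0]]  hard edge: an abrupt 0 -> 100 seam
print(blend_with_brush(photo, fill, (0, 2), radius=2, hardness=0.0))
# [[0.0, 50.0, 100.0, 50.0, 0.0]]    soft edge: the new pixels bleed in gradually
```

The hard brush jumps straight from old to new at its rim, while the soft one steps through in-between values, and that gradient is what hides the seam.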

The tech is getting better every day, but it still lacks a human eye for "vibes." You have to be the judge of whether the edit looks natural or like a science experiment gone wrong. If you find yourself spending more than 20 minutes trying to fix one spot, it might be time to just crop the photo or accept that the orange trash can is now part of your family history. Sometimes, the flaws are what make the memory feel real. But for those times when you just need a clean shot, take it slow and remember that the space behind the object is the most important part of the picture.