You’ve been there. You’re scrolling through a random blog or a social feed, and you see a pair of sneakers or a weird-looking plant that you just have to identify. You take a screenshot. Then what? Most people think they know how Google’s search by image upload works, but the tool has changed so much since the old "drag and drop" days that it’s almost a different beast. It’s not just about finding where a photo came from anymore.

It’s about context.

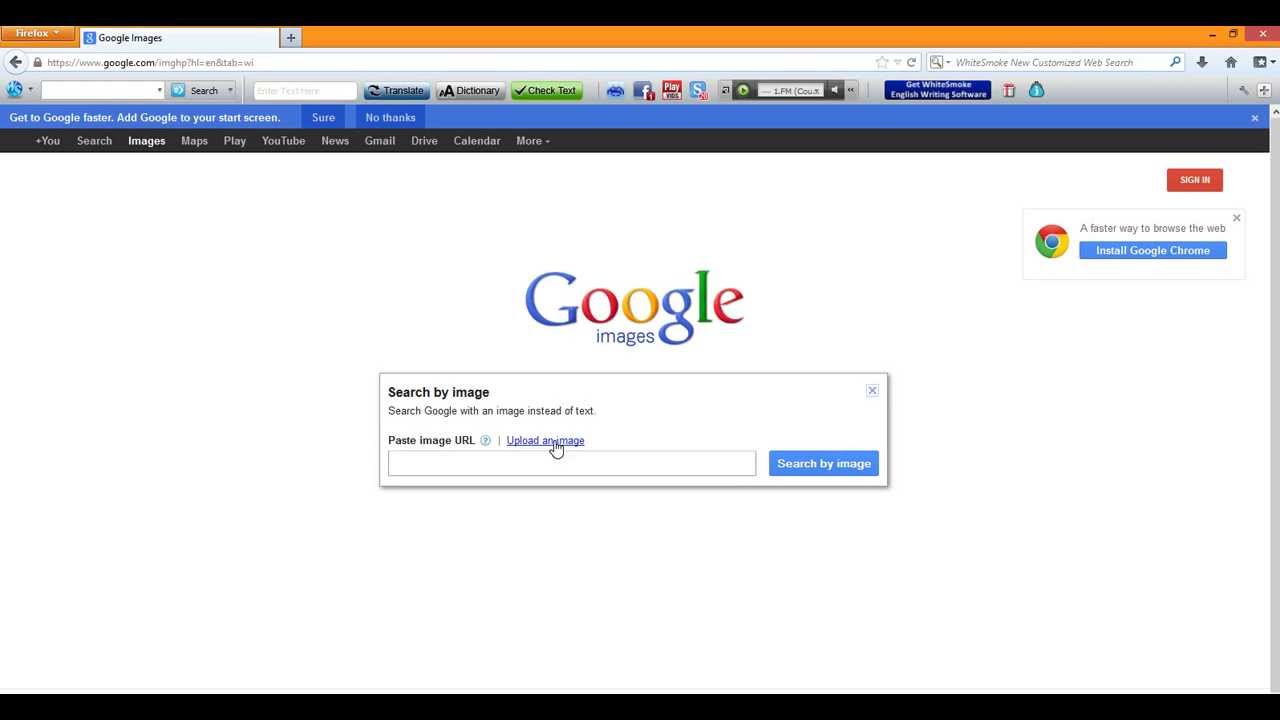

Back in the day, you’d go to the desktop site, hit that little camera icon, and hope for the best. Now, Google has baked this tech into almost every corner of your phone and browser. Google mostly brands it as Lens now, but the same "upload and find" engine powers the whole ecosystem. If you’re still just using it to find a high-res version of a wallpaper, you’re barely scratching the surface of what the algorithm actually sees when it looks at your pixels.

The Shift from Exact Matches to Semantic Understanding

When you search by image upload today, the AI isn’t just looking for identical pixel clusters. That’s the old way. The new way uses computer vision that recognizes "entities": the specific products, objects, places, and text within the frame.

Let’s say you upload a photo of a messy desk. The old Google would look for other photos of messy desks. The current version identifies the specific Logitech keyboard, the half-eaten Granny Smith apple, and the Dell monitor in the background. It breaks the image down into pieces. It’s sort of like how we read a sentence by looking at individual words.

This is why, sometimes, you’ll upload a photo and Google will give you results that don't look exactly like your image but are related in theme or product category. It’s trying to be helpful, though it can be annoying if you’re just trying to track down a specific artist. You’ve probably noticed that "Search by Image" often defaults to "Google Lens" now. That’s because Lens is designed to be proactive. It wants to sell you the shoes in the photo or translate the text on the sign you photographed.
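For contrast, the pixel-level "old way" can be sketched in a few lines. Google doesn’t publish how its matching works, so this is not Google’s algorithm. It’s a classic perceptual-hash technique called average hash, run on tiny hypothetical grayscale images (the `make_image`, `average_hash`, and `hamming` names are made up for illustration). It shrinks an image to an 8x8 grid, records which cells are brighter than average, and counts how many of those 64 bits differ between two images.

```python
from typing import List

Grid = List[List[int]]  # grayscale pixels: 0 = black, 255 = white


def downscale(pixels: Grid, size: int = 8) -> Grid:
    """Shrink to size x size by averaging rectangular blocks (a box filter)."""
    h, w = len(pixels), len(pixels[0])
    if h < size or w < size:
        raise ValueError("image must be at least size x size")
    out = []
    for by in range(size):
        y0, y1 = by * h // size, (by + 1) * h // size
        row = []
        for bx in range(size):
            x0, x1 = bx * w // size, (bx + 1) * w // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def average_hash(pixels: Grid, size: int = 8) -> int:
    """Fingerprint with one bit per cell: 1 if the cell is brighter than the mean."""
    cells = [v for row in downscale(pixels, size) for v in row]
    mean = sum(cells) / len(cells)
    bits = 0
    for v in cells:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; 0 means the fingerprints are identical."""
    return bin(a ^ b).count("1")


def make_image(top: int, left: int, bg: int = 50, fg: int = 200, n: int = 32) -> Grid:
    """Hypothetical test photo: an n x n background with a bright 16 x 16 square."""
    return [[fg if top <= y < top + 16 and left <= x < left + 16 else bg
             for x in range(n)] for y in range(n)]


original = make_image(8, 8)
brighter = [[v + 30 for v in row] for row in original]  # same photo, re-exposed
moved = make_image(0, 16)  # square moved to the top-right corner

print(hamming(average_hash(original), average_hash(brighter)))  # 0
print(hamming(average_hash(original), average_hash(moved)))     # 24
```

The re-exposed copy lands at distance 0, which is why this style of matching is good at spotting reposts. But it has no idea what a keyboard or an apple is: shoot the same desk from another angle and the fingerprint can change completely. That gap is what entity recognition fills.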

Why the Mobile Experience is Actually Better

For years, desktop was king for deep research. Not anymore. On mobile, searching by image is basically a superpower built into the Google App and Chrome. You don’t even have to download the file. You just long-press the image.


If you’re on a supported Android phone, "Circle to Search" is the latest evolution. It’s the same backend technology with a more frictionless UI: you long-press the home button or navigation bar, circle the thing, and boom, results. It’s fast. Almost too fast. It makes the old routine of saving a file, navigating to a website, and clicking "Upload" feel like using a rotary phone.

How to Actually Get Clean Results (The Expert Way)

Most people get frustrated because they get too many "shopping" results. If you’re trying to find the original source of a meme or an old historical photo, those "Buy Now" links are useless. To bypass this, you need to use the "Find image source" button that often appears at the top of the Lens interface.

It’s a tiny bit hidden.

Google wants you to engage with the visual search results because those are monetizable. But for journalists, researchers, or anyone worried about deepfakes, finding the original upload is the goal. After you upload your file, look for the "Find image source" prompt. It switches to a more traditional index search that lists exact matches of the file across the web, which is what you need to trace its earliest known appearance.

Also, don't sleep on the "Text" and "Translate" tabs. If you upload a screenshot of a technical manual in German, Google doesn’t just find the manual; it overlays the translation right on the image. It’s spooky how good it is.

The Privacy Elephant in the Room

We have to talk about what happens to your data. When you search by image upload, you aren’t just sending a file into a void. Your uploads may help train Google’s models: Google’s Privacy Policy is pretty clear that it uses your interactions to improve its services.


While they aren't necessarily showing your private family photos to the world, the metadata and the visual content are being processed. This is a big reason why some people prefer tools like TinEye or Yandex for more "anonymous" reverse searches. TinEye, for instance, doesn't "save" your images in the same way for product development. They focus on the index. Google focuses on the understanding.

Common Failures and How to Fix Them

Sometimes the upload just... fails. Or it gives you something completely unrelated. Usually, this happens for one of three reasons:

- Low Contrast: If the subject of your photo blends into the background, the AI gets confused. Try cropping the image before you upload it so the subject is front and center.

- Too Much Noise: If there are five different objects in the frame, Google might focus on the wrong one. Again, the "Crop" tool within the search interface is your best friend here.

- The "Newness" Factor: If you took a photo of something that literally happened five minutes ago, it won’t be in the index yet. Reverse image search isn’t a live satellite feed. It’s an index of what has already been crawled.

The Weird World of AI-Generated Images

In 2026, we are seeing a massive influx of AI-generated content, and it has made reverse image search far more complicated. If you upload a Midjourney-style image, Google will often find thousands of "similar" AI images, making it nearly impossible to find the original creator.

Google is trying to combat this with "About this image" metadata, which attempts to show you if an image has been manipulated or generated. But it’s an arms race. The tech is barely keeping up with the creators.

Pro Tip: Using Chrome DevTools for Hard-to-Reach Images

Ever tried to reverse search an image on a site that blocks right-clicking? It’s a pain. Most people just take a screenshot, but that caps the image at your screen’s resolution. Instead, open your browser’s developer tools (F12 in most desktop browsers) and find the image’s direct URL in the "Elements," "Network," or "Sources" panel. Once you have the direct link, paste it into the "Paste image link" field that appears when you click the camera icon on Google Images.

This gets you the highest resolution available, and higher resolution generally means better matches. It’s a simple trick that separates the casual users from the power users.
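If the image in DevTools has a relative path or a `srcset` list of sizes, you still need to turn it into one absolute URL. Here is a minimal sketch of that step, assuming you’ve copied the page address plus the `<img>` element’s `src` and `srcset` attributes. The helper names and example URLs are hypothetical, and the `srcset` parsing is simplified (it assumes no commas inside URLs). It doesn’t contact Google; it just produces the link you’d paste.

```python
from urllib.parse import urljoin, urlparse


def largest_candidate(srcset: str) -> str:
    """Return the URL of the biggest candidate in an <img> srcset value.

    Simplified: assumes URLs contain no commas. The full HTML parsing
    algorithm handles more cases (for example data: URLs).
    """
    widths, densities = [], []
    for candidate in srcset.split(","):
        parts = candidate.split()
        if not parts:
            continue
        url = parts[0]
        descriptor = parts[1] if len(parts) > 1 else "1x"  # no descriptor means 1x
        if descriptor.endswith("w"):
            widths.append((int(descriptor[:-1]), url))
        elif descriptor.endswith("x"):
            densities.append((float(descriptor[:-1]), url))
    # Width descriptors describe actual pixel widths, so prefer them when present.
    pool = widths or densities
    if not pool:
        raise ValueError("no usable candidates in srcset")
    return max(pool, key=lambda c: c[0])[1]


def direct_image_url(page_url: str, src: str, srcset: str = "") -> str:
    """Turn the src/srcset DevTools shows for an <img> into an absolute URL."""
    chosen = largest_candidate(srcset) if srcset.strip() else src
    absolute = urljoin(page_url, chosen)  # resolves "thumbs/a.jpg" or "/full/a.jpg"
    if urlparse(absolute).scheme not in ("http", "https"):
        # Only http(s) links point at a file on a server; blob: and data: URLs don't.
        raise ValueError("not a web URL: " + absolute[:40])
    return absolute


# Hypothetical values copied from DevTools.
page = "https://example.com/gallery/photo-page.html"
src = "thumbs/sneaker-480.jpg"
srcset = "thumbs/sneaker-480.jpg 480w, /full/sneaker-1600.jpg 1600w, thumbs/sneaker-960.jpg 960w"

print(direct_image_url(page, src, srcset))  # https://example.com/full/sneaker-1600.jpg
print(direct_image_url(page, src))          # https://example.com/gallery/thumbs/sneaker-480.jpg
```

Preferring the largest `w` candidate follows the same logic as the tip above: give the search engine the most pixels you can.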

Beyond Just "Finding" Stuff

The real value of searching by image in a professional setting is verification. If you’re a recruiter, you can check whether a candidate’s profile picture is a stock photo. If you’re a real estate agent, you can see whether a "new" listing has been posted on other sites for months.

It’s a truth-testing machine.

Think about the way disinformation spreads. A photo from a 2014 protest gets recirculated as if it happened yesterday. One quick upload can reveal the truth: it shows when Google first saw the image and the context it originally appeared in. In an era where "seeing is believing" no longer holds, this tool is one of the few ways to verify reality.

The Future: Multisearch

Google is currently pushing something called "Multisearch." This is the logical conclusion of the upload feature. You upload a photo of a dining set, and then you type "green" into the search bar. Google then finds that specific dining set, but only in green.


It’s combining visual data with text queries. This is where the technology is heading: a world where you don’t have to describe things with words because you can just show the computer what you mean.

Actionable Steps for Better Image Searching

Stop just clicking and hoping. If you want to master this, change your workflow starting today.

First, get the Google App on your phone. It’s significantly more powerful for visual tasks than the mobile browser version. Second, if you’re on desktop, use Chrome’s "Search image with Google" right-click option rather than navigating to the homepage; it searches the image in place and preserves its original context.

When you get your results, don't just look at the first three links. Scroll down to the "Visual Matches" section to see how the AI is categorizing your subject. If it thinks your cat is a "Maine Coon" but it's actually a "Persian," you know the search is off-track and you need a clearer photo.

Finally, always check the "About this image" section if it's available. It tells you when the image was first indexed by Google. This is the single most important piece of data for spotting fakes or old news being rebranded as new.

Reverse searching isn’t just a tech trick. It’s a literacy skill. The more you use it, the more you realize how much of the internet is just a series of copies. Searching by image upload lets you find the original in a sea of duplicates.

Open a new tab. Take a photo you’ve been curious about. Upload it. See what the "brain" actually thinks it’s looking at. You’ll be surprised how often your own eyes were missing the details the AI catches instantly. Use the cropping tool to isolate specific parts of the image and watch the results change in real time. This is how you refine the search. This is how you find the unfindable.