Scraping eBay is a nightmare. Honestly, if you’ve ever tried to pull more than fifty product listings using a basic Python script, you probably hit a wall—or a CAPTCHA—faster than you could say "Data Extraction." It’s frustrating because the data is right there. You want the price history of a 1998 Charizard card or the average sell-through rate for refurbished Dell laptops, but eBay’s anti-bot defenses, managed by companies like Akamai, are built to keep you out.

They have to. If they let every price-comparison bot crawl their site freely, their servers would melt. But for a developer or a data scientist, knowing how to scrape eBay is a superpower. It’s the difference between guessing a market price and owning the market.

Why eBay is a Moving Target for Scrapers

Most people think scraping is just about finding a CSS selector and hitting "save." It isn't. Not anymore. eBay uses a complex mix of server-side rendering and client-side JavaScript. If you look at the source code of a search results page, you'll see a mess of JSON objects tucked inside <script> tags. This is actually a blessing. Instead of parsing messy HTML, you can often find the "View Item" data structured neatly if you know where to look.
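
To make the "JSON inside script tags" idea concrete, here is a minimal sketch that uses only the Python standard library. The sample HTML and its field names are invented for illustration. Real eBay pages embed much larger payloads whose keys you would have to inspect yourself.

```python
import json
from html.parser import HTMLParser


class ScriptJSONCollector(HTMLParser):
    """Collects the text of each <script> tag and keeps the ones that parse as JSON."""

    def __init__(self):
        super().__init__()
        self._in_script = False
        self._buffer = []
        self.payloads = []

    def handle_starttag(self, tag, attrs):
        if tag == "script":
            self._in_script = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_script:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_script:
            self._in_script = False
            text = "".join(self._buffer).strip()
            try:
                parsed = json.loads(text)
            except json.JSONDecodeError:
                return  # Ordinary JavaScript, not a JSON payload.
            if isinstance(parsed, (dict, list)):
                self.payloads.append(parsed)


def extract_json_scripts(html):
    """Return every JSON object or array found inside <script> tags."""
    parser = ScriptJSONCollector()
    parser.feed(html)
    parser.close()
    return parser.payloads


# Invented sample page: one plain-JavaScript script, one JSON script.
sample_html = """
<html><body>
<script>var x = 1;</script>
<script type="application/json">{"title": "Example listing", "price": "12.50"}</script>
</body></html>
"""
print(extract_json_scripts(sample_html))
# → [{'title': 'Example listing', 'price': '12.50'}]
```

Scripts that assign JSON to a JavaScript variable would need an extra step to strip the assignment before parsing. This sketch only handles scripts whose entire body is JSON.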

But there’s a catch. eBay’s layout changes. They run A/B tests constantly. One day your selector is .s-item__price, and the next day, the class name has a random string appended to it to break your bot.

And then there are the headers. If your request doesn't include a realistic User-Agent or a plausible Accept-Language header, eBay knows you're a script. Real humans don't send requests with a "python-requests/2.28.1" User-Agent. Their browsers send headers that look like Chrome running on Windows, and fingerprinting scripts can go further and check details like screen resolution.
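
As a rough illustration, a browser-like header set might look like the sketch below. The exact values are assumptions modelled on a desktop Chrome session, not a list eBay is known to check. The example uses the standard library's urllib so it runs without extra packages and without sending any traffic.

```python
import urllib.request

# Assumed header values modelled on desktop Chrome; adjust to match a real browser.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    ),
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url, headers=BROWSER_HEADERS):
    """Attach the browser-like headers to a request object without sending it."""
    return urllib.request.Request(url, headers=dict(headers))


req = build_request("https://www.ebay.com/sch/i.html?_nkw=laptop")
# urllib stores header names capitalized as "User-agent", "Accept-language", etc.
print(req.get_header("User-agent"))
```

The same dictionary can be passed as the headers argument to requests.get if you prefer that library.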

The Problem with the Official API

You might think, "Why not just use the eBay Developers Program?" It's a fair question. The eBay Browse API and the legacy Finding API (which eBay has been retiring in favor of its newer REST APIs) are legitimate tools. But they are restrictive. There are call limits. There's a vetting process. And frankly, the data you get through the API isn't always as "raw" as what you see on the live site. If you're doing deep market research, you want the actual live listings, not a filtered API response that might lag behind by several minutes or hide certain "sponsored" attributes.

The Technical Setup: Tools That Actually Work

Forget Selenium for large-scale scraping. It's too slow. It's a resource hog. If you're trying to scrape thousands of pages, spinning up a full headless browser for every request will kill your RAM.

Instead, look at Playwright or Puppeteer. They're faster, more stable, and have better "stealth" plugins. The playwright-extra package for Node.js, for instance, can load the stealth plugin from the puppeteer-extra ecosystem, which helps bypass basic bot detection by masking the telltale fingerprints of an automated browser.

But the real secret isn't the library. It's the proxy.

Residential vs. Datacenter Proxies

If you use a cheap datacenter proxy from a well-known provider, you'll get blocked. eBay sees a massive influx of traffic from an AWS or DigitalOcean IP address and shuts it down instantly. You need residential proxies. These are IP addresses assigned to real homes. They're harder to detect because, to eBay, you just look like a guy in Ohio browsing for power tools.

Don't ignore the "Time to First Byte" (TTFB). If your proxy is slow, your scraper will hang, and eBay's server might time out the connection, leading to incomplete data.
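
One possible shape for proxy rotation with fail-fast timeouts is sketched below. The proxy URLs are placeholders (example.com hosts and made-up credentials), and the timeout numbers are arbitrary starting points rather than recommended values.

```python
import itertools

# Placeholder residential proxy endpoints; substitute your provider's gateway URLs.
PROXY_URLS = [
    "http://user:pass@proxy-1.example.com:8000",
    "http://user:pass@proxy-2.example.com:8000",
]

# (connect timeout, read timeout) in seconds, so a slow proxy fails fast
# instead of hanging the scraper.
TIMEOUT = (5, 15)


def proxy_rotation(proxy_urls):
    """Yield proxy mappings forever, cycling through the pool in order."""
    for url in itertools.cycle(proxy_urls):
        yield {"http": url, "https": url}


rotation = proxy_rotation(PROXY_URLS)
first, second, third = next(rotation), next(rotation), next(rotation)
print(first["https"])
# → http://user:pass@proxy-1.example.com:8000
```

Each mapping is in the form that requests.get accepts for its proxies argument, and TIMEOUT can be passed as its timeout argument.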

Bypassing the Infamous "Robot Check"

You've seen it. The "Please verify you're a human" screen. This happens when your "velocity" is too high. If you're hitting eBay’s servers 100 times a second, you're toast.

- Rotate your User-Agents: Don't just use one. Use a list of 500.

- Randomize your delays: Don't scrape every 5 seconds. Scrape at 4.2 seconds, then 7.8, then 3.1. Make it look messy. Humans are messy.

- Handle CAPTCHAs: Sometimes, despite your best efforts, the CAPTCHA appears. You can integrate services like 2Captcha or Anti-Captcha into your script. These services send the image to a human who solves it and sends the token back to your bot. It's a bit of an "ugly" solution, but it works.
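
The first two tips can be sketched in a few lines. The three User-Agent strings below are a tiny illustrative pool, and the 3 to 8 second window is an assumption, not a threshold eBay publishes.

```python
import random
import time

# Tiny illustrative pool; a real rotation list would be far longer.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:121.0) "
    "Gecko/20100101 Firefox/121.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.1 Safari/605.1.15",
]


def pick_user_agent():
    """Choose a User-Agent at random for the next request."""
    return random.choice(USER_AGENTS)


def jittered_delay(min_seconds=3.0, max_seconds=8.0):
    """Return a random, non-round pause length in seconds."""
    return random.uniform(min_seconds, max_seconds)


def polite_pause(sleep=time.sleep, min_seconds=3.0, max_seconds=8.0):
    """Sleep for a jittered interval and report how long the pause was."""
    delay = jittered_delay(min_seconds, max_seconds)
    sleep(delay)
    return delay


print(pick_user_agent())
print(f"next pause: {jittered_delay():.1f}s")
```

Calling polite_pause() between requests gives the messy, human-looking rhythm described above. The sleep parameter exists only so the pause can be swapped out when testing.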

Parsing the Data: The Meat of the Operation

Once you have the HTML, you need to extract the goods. Most eBay scrapers focus on the "Sold" listings. Why? Because the asking price is a fantasy. The sold price is reality.

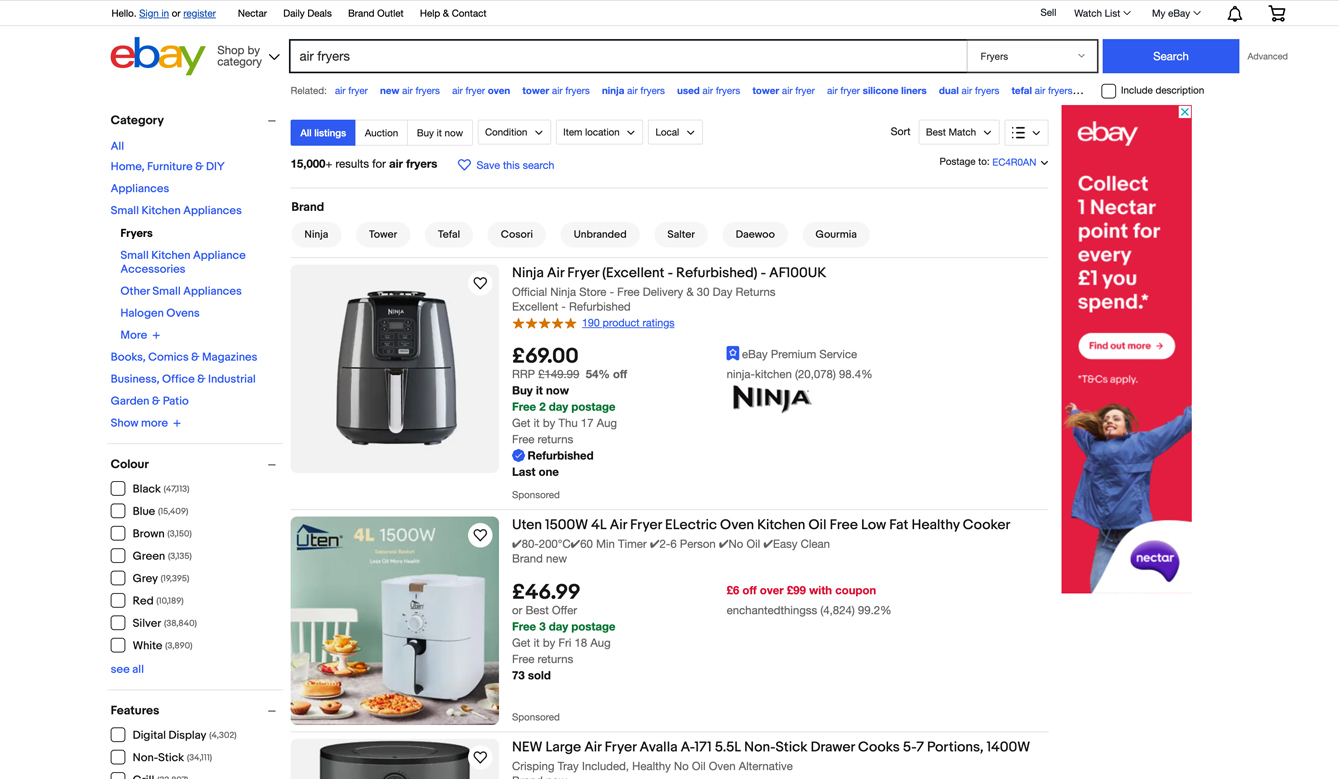

To find sold items, you usually append &LH_Sold=1&LH_Complete=1 to the search URL. Inside the HTML, the item containers usually follow a predictable structure. You'll find the title in an <h3> tag with a class like s-item__title. The price is usually in a <span> with the class s-item__price.
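
A small helper for building that URL might look like this. It is only a sketch: it uses the two query parameters named above plus the _nkw keyword parameter, and lets the standard library handle URL encoding.

```python
from urllib.parse import urlencode

SEARCH_ENDPOINT = "https://www.ebay.com/sch/i.html"


def sold_search_url(keywords):
    """Build a search URL restricted to sold, completed listings."""
    params = {"_nkw": keywords, "LH_Sold": 1, "LH_Complete": 1}
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


print(sold_search_url("1998 charizard"))
# → https://www.ebay.com/sch/i.html?_nkw=1998+charizard&LH_Sold=1&LH_Complete=1
```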

Wait. There's a hidden trap.

eBay often includes "Results from matching categories" or "People also viewed" sections. If your CSS selectors are too broad, your CSV file will be full of junk data that has nothing to do with your search. You have to scope your selectors to the #mainContent or the specific <ul> that holds the search results.

A Quick Python Snippet (Conceptual)

    import requests
    from bs4 import BeautifulSoup

    # This is a bare-bones example. In the real world, you'd need
    # a proxy rotator and sophisticated headers.
    def get_ebay_price(item_name):
        # Let requests URL-encode the query; both flags select sold listings.
        params = {"_nkw": item_name, "LH_Sold": "1", "LH_Complete": "1"}
        headers = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
        }
        response = requests.get(
            "https://www.ebay.com/sch/i.html",
            params=params, headers=headers, timeout=10,
        )
        if response.status_code != 200:
            print("Blocked!")
            return []

        soup = BeautifulSoup(response.text, "html.parser")
        results = []
        for item in soup.find_all("div", class_="s-item__info"):
            # Match on class only; the tag carrying the title class can vary.
            title = item.find(class_="s-item__title")
            price = item.find(class_="s-item__price")
            if title and price:
                results.append((title.text.strip(), price.text.strip()))
                print(f"{title.text.strip()} sold for {price.text.strip()}")
        return results

    # Use with caution.

The Legal and Ethical Gray Area

Let's talk about the elephant in the room. Is scraping eBay legal?

In the United States, the Ninth Circuit's rulings in the landmark case hiQ Labs v. LinkedIn suggested that scraping publicly available data doesn't violate the Computer Fraud and Abuse Act (CFAA). However, eBay's Terms of Service explicitly forbid it, so a breach-of-contract claim is still on the table.

If you scrape too aggressively, they can’t exactly put you in jail, but they can—and will—sue if you’re a commercial entity causing "systemic harm" to their infrastructure. Don't be a jerk. Don't scrape every second. Don't resell the data in a way that directly competes with eBay’s own core business. Respect robots.txt where you can, though we all know eBay's robots.txt is basically a "Keep Out" sign written in crayon.

Dealing with Dynamic Content

Modern eBay pages use a lot of "Lazy Loading." This means the images and sometimes even the prices don't actually exist in the HTML until you scroll down.

If you're using the requests library on its own, you'll miss this, because it never executes JavaScript. This is why browser automation tools like Puppeteer or Playwright are essential. You can script the bot to "scroll to bottom," wait two seconds for the DOM to update, and then grab the content. It takes longer, but you capture images and prices that a plain HTTP fetch never sees.
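
The scroll, wait, and grab loop can be sketched in Python using Playwright's sync API in place of Puppeteer, purely to keep the examples in one language. The two-second pause comes from the text above. The ten-round cap and the "stop when the page height stops growing" rule are assumptions for illustration.

```python
def scroll_to_bottom(page, pause_ms=2000, max_rounds=10):
    """Scroll until the page height stops growing; return the number of scrolls."""
    previous_height = 0
    for round_number in range(1, max_rounds + 1):
        height = page.evaluate("document.body.scrollHeight")
        if height == previous_height:
            return round_number - 1  # Nothing new loaded since the last scroll.
        page.evaluate("window.scrollTo(0, document.body.scrollHeight)")
        page.wait_for_timeout(pause_ms)  # Give lazy-loaded content time to arrive.
        previous_height = height
    return max_rounds


def fetch_rendered_html(url):
    """Open the URL in headless Chromium, scroll it, and return the final HTML."""
    # Imported here so scroll_to_bottom can be reused without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        page = browser.new_page()
        page.goto(url)
        scroll_to_bottom(page)
        html = page.content()
        browser.close()
    return html
```

Calling fetch_rendered_html with a search URL would return the HTML after lazy-loaded content has had a chance to appear, ready to hand to a parser.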

Scaling Up: The Distributed Approach

If you need to scrape 100,000 items, you can't do it from your laptop. You need a distributed system.

Usually, this involves a queue system like Redis. You push all the URLs you want to scrape into the queue. Then, you have multiple "worker" nodes (small VPS instances) that pull a URL, scrape it using a proxy, and push the result into a database like MongoDB or PostgreSQL.

This prevents a single failure from killing your entire project. If one worker gets blocked, the others keep humming along.
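
The queue-and-workers pattern is easier to see in miniature. The sketch below swaps Redis and VPS nodes for an in-process queue.Queue and threads, and uses a hypothetical fake_scrape function in place of real network calls, so it shows only the shape of the design.

```python
import queue
import threading


def run_workers(urls, scrape, worker_count=3):
    """Fan URLs out to worker threads; one failing URL does not stop the rest."""
    tasks = queue.Queue()
    for url in urls:
        tasks.put(url)

    results, failures = [], []
    lock = threading.Lock()

    def worker():
        while True:
            try:
                url = tasks.get_nowait()
            except queue.Empty:
                return  # Queue drained; this worker is done.
            try:
                record = scrape(url)
                with lock:
                    results.append(record)
            except Exception as exc:
                with lock:
                    failures.append((url, str(exc)))

    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results, failures


def fake_scrape(url):
    """Hypothetical stand-in for a real scrape; fails on one URL to simulate a block."""
    if url.endswith("/blocked"):
        raise RuntimeError("robot check")
    return {"url": url, "status": "ok"}


urls = [f"https://example.com/item/{i}" for i in range(5)]
urls.append("https://example.com/blocked")
results, failures = run_workers(urls, fake_scrape)
print(len(results), "scraped,", len(failures), "failed")
# → 5 scraped, 1 failed
```

In a real deployment the in-memory queue would be replaced by the shared Redis queue and the results list by database writes, but the failure isolation works the same way.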

Turning Raw Data into Insights

Scraping is just the first step. The real magic happens in the cleaning. eBay data is noisy. Sellers use "keyword stuffing" in titles. You'll see "iPhone 15 CASE ONLY NO PHONE" in your results. If you're not careful, your average price calculation will be skewed by $20 plastic cases when you’re looking for $800 phones.

You need to implement filters.

- Price Floor: Ignore anything below a certain dollar amount.

- Keyword Blacklist: Filter out "box only," "damaged," "broken," or "parts."

- Outlier Detection: Use a simple Z-score to remove prices that are more than three standard deviations away from the mean.
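
The three filters above could be combined roughly as follows. The listings are made-up sample data, the price floor is an arbitrary choice, and the blacklist is just the four phrases from the list. Treat it as a starting sketch rather than a tuned pipeline.

```python
import statistics

KEYWORD_BLACKLIST = ("box only", "damaged", "broken", "parts")


def clean_listings(listings, price_floor, z_threshold=3.0):
    """Apply the price floor, the keyword blacklist, then a Z-score outlier cut."""
    kept = [
        (title, price)
        for title, price in listings
        if price >= price_floor
        and not any(word in title.lower() for word in KEYWORD_BLACKLIST)
    ]
    if len(kept) < 2:
        return kept  # Too few points to measure spread.
    prices = [price for _, price in kept]
    mean = statistics.mean(prices)
    spread = statistics.pstdev(prices)
    if spread == 0:
        return kept  # All prices identical, so nothing is an outlier.
    return [(t, p) for t, p in kept if abs(p - mean) / spread <= z_threshold]


# Made-up sample data: 19 plausible sales plus three kinds of noise.
listings = [(f"iPhone 15 128GB unlocked #{i}", 780.0 + i) for i in range(19)]
listings += [
    ("iPhone 15 CASE ONLY NO PHONE", 20.0),  # removed by the price floor
    ("iPhone 15 broken screen", 400.0),      # removed by the blacklist
    ("iPhone 15 bundle lot", 5000.0),        # removed by the Z-score cut
]
cleaned = clean_listings(listings, price_floor=100.0)
print(len(cleaned), "listings kept")
# → 19 listings kept
```

One caveat on the design: with very small samples a single extreme price inflates the standard deviation so much that its Z-score can never exceed three, so the outlier cut only becomes useful once you have a dozen or more listings.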

The Future of Scraping in 2026

We're seeing a massive shift toward AI-powered scraping. Instead of writing CSS selectors, developers are using LLMs to "look" at a page and extract data points. While this is computationally expensive, it's incredibly resilient. If eBay changes its class names, the AI doesn't care. It knows what a "price" looks like regardless of the HTML tag.

But for now, the most cost-effective way to scrape eBay remains a well-tuned, stealthy script combined with high-quality residential proxies.

Actionable Next Steps for Success

- Audit your headers: Go to a site like "WhatIsMyBrowser" and copy your exact headers. Use those in your script.

- Start small: Scrape one page every 30 seconds. Prove the logic works before you try to scale.

- Invest in Proxies: Don't waste time with "free" proxies. They are often compromised and slow. Bright Data and Oxylabs are among the best-known commercial providers.

- Validate your data: Periodically check your scraped prices against a manual search to ensure your script isn't grabbing "Sponsored" or "Recommended" items by mistake.

Building a robust scraper takes patience. You will get blocked. You will have to rewrite your selectors. But once you have a steady stream of eBay data flowing into your database, you have a competitive edge that most people will never bother to build.