Honestly, if you've ever tried to train a reinforcement learning agent, you know it's usually a nightmare. One minute your agent is learning to walk, and the next, it’s twitching on the floor because a single bad gradient update blew up the entire brain of the model. It's frustrating.

For years, researchers at places like OpenAI and DeepMind struggled with this kind of "policy collapse," where one oversized update wipes out what the agent had already learned. Then came Proximal Policy Optimization, or PPO.

It changed everything.

Introduced by John Schulman and his team in 2017, PPO became the "Goldilocks" of algorithms. It wasn't as unstable as the old-school Vanilla Policy Gradient, and it wasn't as head-splittingly complex as TRPO (Trust Region Policy Optimization). It was just right.

Today, PPO is the engine under the hood for a lot of the AI you actually care about. If you’ve talked to a large language model recently, there’s a good chance PPO was used during the RLHF (Reinforcement Learning from Human Feedback) phase to stop the AI from being a jerk.

The Secret Sauce: Why PPO Doesn't "Break"

The core problem with Reinforcement Learning (RL) is that the data the agent learns from is "non-stationary."

Basically, as the agent learns, it changes its behavior. As it changes its behavior, it sees new parts of the environment. If it sees something it doesn't like and overreacts, it might change its internal logic so drastically that it "forgets" how to do the basic stuff it already mastered.

PPO stops this by being incredibly cautious.

The Clipping Mechanism

Instead of letting the model make a massive leap toward a new strategy, PPO uses a clipped surrogate objective.

Think of it like a rubber band. You can pull the policy toward a better reward, but if you pull too far, the "clip" kicks in and says, "Whoa there, let’s not get crazy."

Technically, it tracks the probability ratio between the new policy and the old policy for each action. If that ratio drifts outside a small band around 1 (typically 0.8 to 1.2, which is a clip range of 0.2 or 20%), the objective stops rewarding any further movement in that direction. It removes the incentive to move outside that safe zone.

This is why PPO is so stable. It forces the model to take "proximal" steps—steps that are close to what it already knows.
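
If you like seeing the math as code, here is a minimal NumPy sketch of the clipped objective described above. The function name `clipped_surrogate` and its arguments are made up for illustration, and the 0.2 default is just the common clip range mentioned earlier. Real implementations compute this on tensors inside a training loop.

```python
import numpy as np

def clipped_surrogate(logp_new, logp_old, advantages, clip_eps=0.2):
    """Mean clipped surrogate objective (a quantity to maximize).

    logp_new / logp_old: log-probabilities of the taken actions under the
    new and old policy. advantages: how much better than expected each
    action turned out. All names here are illustrative assumptions.
    """
    ratio = np.exp(logp_new - logp_old)             # new prob / old prob
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Taking the minimum means extra gain beyond the clip range is ignored.
    return np.minimum(unclipped, clipped).mean()

# A good action (advantage 1.0) whose probability grew by 50%:
# the objective only gives credit up to a ratio of 1.2.
capped = clipped_surrogate(np.log(np.array([1.5])), np.zeros(1), np.array([1.0]))
```

Notice that the minimum only caps the gain. If the policy moves in a direction that makes things worse, the unclipped term is the smaller one and the full penalty still applies.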

PPO vs. Everything Else

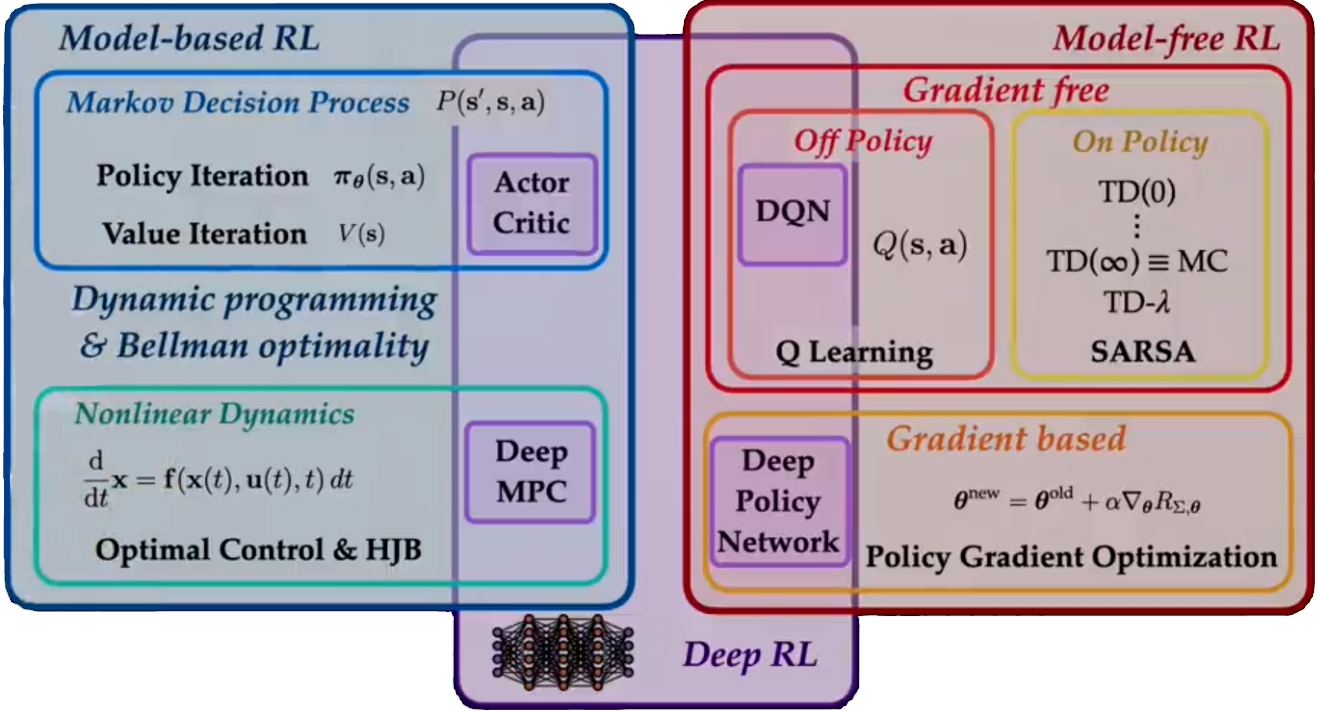

You've probably heard of DQN (Deep Q-Networks) or A3C. Why do people choose PPO over them?

- DQN is great for discrete stuff, like pressing buttons in an Atari game. But it struggles with continuous movement, like a robot arm rotating or a car steering. PPO handles both easily.

- TRPO was the predecessor. It relied on heavy math (a second-order trust-region step, typically solved with conjugate gradient plus a line search) that made it slow and a real pain to code. PPO gets comparable results using simple first-order optimization.

- Vanilla Policy Gradient is like a wild horse. It’s fast, but it’ll throw you off a cliff the moment it sees a shiny object.

In the real world, "simple and stable" wins every time. Developers would rather have an algorithm that works 95% of the time without constant babysitting than a "perfect" algorithm that requires a PhD to tune every Tuesday.

Where PPO Lives in 2026

It’s not just for playing Hide and Seek in digital sandboxes anymore.

Large Language Models (LLMs)

When you hear about "aligning" an AI to be helpful and harmless, that’s often PPO at work. After a model is pre-trained on the internet, humans rank its answers. A reward model is built from those rankings, and then PPO is used to nudge the LLM toward the high-scoring answers.
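
To make the "reward model built from rankings" step a bit more concrete, here is a rough sketch of one common way to turn a human preference into a training signal: a pairwise loss that is small when the preferred answer scores higher than the rejected one. This is an assumption about how such a model might be trained, not a description of any particular lab's pipeline, and `pairwise_ranking_loss` is a hypothetical name.

```python
import numpy as np

def pairwise_ranking_loss(reward_chosen, reward_rejected):
    """Average of -log(sigmoid(r_chosen - r_rejected)) over answer pairs.

    reward_chosen / reward_rejected: reward-model scores for the answer
    humans preferred and the one they ranked lower (illustrative inputs).
    """
    diff = np.asarray(reward_chosen, dtype=float) - np.asarray(reward_rejected, dtype=float)
    # log(1 + exp(-diff)) equals -log(sigmoid(diff)), computed stably.
    return np.logaddexp(0.0, -diff).mean()

# The loss shrinks as the preferred answer pulls ahead of the rejected one.
tie = pairwise_ranking_loss([0.0], [0.0])
clear_win = pairwise_ranking_loss([5.0], [0.0])
```

Once a reward model scores answers in a way that agrees with the human rankings, its output is what PPO treats as the reward.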

Robotics

Teaching a robot to pick up a strawberry without crushing it is hard. You can't write an "if-then" statement for every possible finger pressure. PPO allows the robot to "feel" its way through millions of simulations until it finds the sweet spot of pressure.

Finance

Some trading bots use it to navigate the mess of the stock market. Because the market is constantly shifting, the stability of PPO is a huge asset. It helps keep the bot from seeing a single "flash crash" and deciding to sell everything it owns in a panic.

What Most People Get Wrong

A common misconception is that PPO is "sample efficient."

It's actually not.

Compared to "off-policy" algorithms like Soft Actor-Critic (SAC), PPO is a bit of a data hog. It is on-policy: once a batch of experience has been used for an update, it gets thrown away, so the agent needs a steady supply of fresh examples.

However, PPO is easy to parallelize, meaning you can run 1,000 copies of the environment at once. When simulation is cheap, the "inefficiency" often matters much less in practice. You just throw more compute at it.

Getting Started: Actionable Steps

If you're looking to actually implement this, don't write it from scratch unless you're doing it for a grade.

- Use Stable Baselines3: This is a widely used PyTorch library of RL algorithms. Its PPO implementation is rock solid and well-documented.

- Watch Your Entropy: If your agent stops exploring too early, increase the entropy coefficient. It's the "curiosity" dial of the algorithm.

- Normalize Your Inputs: PPO is sensitive to the scale of your data. If your rewards are in the millions but your observations are between 0 and 1, the math will get wonky. Normalize everything.

- Start Simple: Try the "CartPole" or "LunarLander" environments in Gymnasium first. If you can't get PPO to land a 2D rocket, you definitely won't get it to solve a 3D robot.
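
For the "normalize everything" tip, here is a small sketch of a running normalizer that keeps track of the mean and variance of whatever you feed it. `RunningNormalizer` is a hypothetical helper written for this post. Libraries like Stable Baselines3 ship their own wrappers for this, so treat it as an illustration of the idea rather than something to paste into production.

```python
import numpy as np

class RunningNormalizer:
    """Tracks a running mean/variance and rescales inputs to roughly N(0, 1)."""

    def __init__(self, shape, eps=1e-8):
        self.mean = np.zeros(shape)
        self.var = np.ones(shape)
        self.count = 0
        self.eps = eps  # avoids division by zero for constant features

    def update(self, batch):
        """Fold a new batch (rows = samples) into the running statistics."""
        batch = np.asarray(batch, dtype=float)
        b_mean, b_var, b_count = batch.mean(axis=0), batch.var(axis=0), batch.shape[0]
        delta = b_mean - self.mean
        total = self.count + b_count
        # Standard formula for combining the mean/variance of two groups.
        m2 = self.var * self.count + b_var * b_count + delta**2 * self.count * b_count / total
        self.mean = self.mean + delta * b_count / total
        self.var = m2 / total
        self.count = total

    def normalize(self, x):
        return (np.asarray(x, dtype=float) - self.mean) / np.sqrt(self.var + self.eps)

# Observations on a wildly large scale, fed in two chunks.
rng = np.random.default_rng(0)
raw = rng.normal(5.0, 1000.0, size=(200, 3))
normalizer = RunningNormalizer(shape=(3,))
normalizer.update(raw[:80])
normalizer.update(raw[80:])
scaled = normalizer.normalize(raw)  # now roughly zero mean, unit variance
```

The same trick works for rewards. The point is that the network sees numbers on a similar scale no matter what units the environment uses.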

The beauty of PPO is its reliability. It's the "boring" choice in the best possible way. While other researchers are chasing the newest, flashiest papers, the people actually building products are usually just sticking with PPO because it just works.

To dive deeper, start by setting up a basic Gymnasium environment and running the PPO agent from Stable Baselines3 to see how it handles a basic balancing task.
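
Here is roughly what that first experiment could look like. It is a sketch that assumes `stable-baselines3` and `gymnasium` are installed. The hyperparameter values are illustrative guesses, not tuned settings, and `train_cartpole` is just a name picked for this example.

```python
# Illustrative settings only; none of these values are tuned.
PPO_KWARGS = dict(
    clip_range=0.2,  # how far the policy ratio may drift from 1
    ent_coef=0.01,   # entropy bonus; raise it if exploration dies too early
    verbose=1,       # print training stats to the console
)

def train_cartpole(total_timesteps=50_000, n_envs=4):
    """Train PPO on CartPole with a few parallel environment copies."""
    # Imported here so the settings above can be read without the libraries installed.
    from stable_baselines3 import PPO
    from stable_baselines3.common.env_util import make_vec_env
    from stable_baselines3.common.evaluation import evaluate_policy

    env = make_vec_env("CartPole-v1", n_envs=n_envs)  # n_envs copies side by side
    model = PPO("MlpPolicy", env, **PPO_KWARGS)
    model.learn(total_timesteps=total_timesteps)

    # Average episode reward over a handful of evaluation episodes.
    mean_reward, std_reward = evaluate_policy(model, env, n_eval_episodes=10)
    return model, mean_reward, std_reward
```

Calling `train_cartpole()` kicks off training and returns the model along with its average evaluation reward. If the pole stays up, try bumping `n_envs` or swapping in a harder environment.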

Expert Insight: Watch the KL divergence between the old and new policy in your logs. If it spikes, the clipping isn't keeping your updates small, and your model may be about to "collapse." Keep that number small and steady for a successful run.
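
If you want a feel for what that number measures, here is a minimal sketch of one commonly used estimator, computed from the log-probabilities of the actions the agent actually took. The function name `approx_kl` is chosen for illustration, and the exact formula your library logs may differ, so check its docs.

```python
import numpy as np

def approx_kl(logp_new, logp_old):
    """Sample-based estimate of how far the new policy moved from the old one.

    Uses the mean of (ratio - 1) - log(ratio), which is never negative and is
    exactly zero when the two policies agree on every sampled action.
    """
    log_ratio = np.asarray(logp_new, dtype=float) - np.asarray(logp_old, dtype=float)
    return np.mean(np.expm1(log_ratio) - log_ratio)

# No policy change means zero divergence; a bigger shift gives a bigger value.
no_change = approx_kl([-1.0, -2.0], [-1.0, -2.0])
small_step = approx_kl([-0.9, -2.1], [-1.0, -2.0])
big_step = approx_kl([-0.2, -3.5], [-1.0, -2.0])
```

A healthy run tends to keep this value small from update to update. A sudden jump is your cue to lower the learning rate or tighten the clip range.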