You’ve seen it everywhere. Netflix shows you the "Top 10 in the US Today." Twitter (X) shoves trending hashtags down your throat. Amazon tells you exactly which ergonomic mouse is flying off the shelves this second.

It looks easy. It isn't.

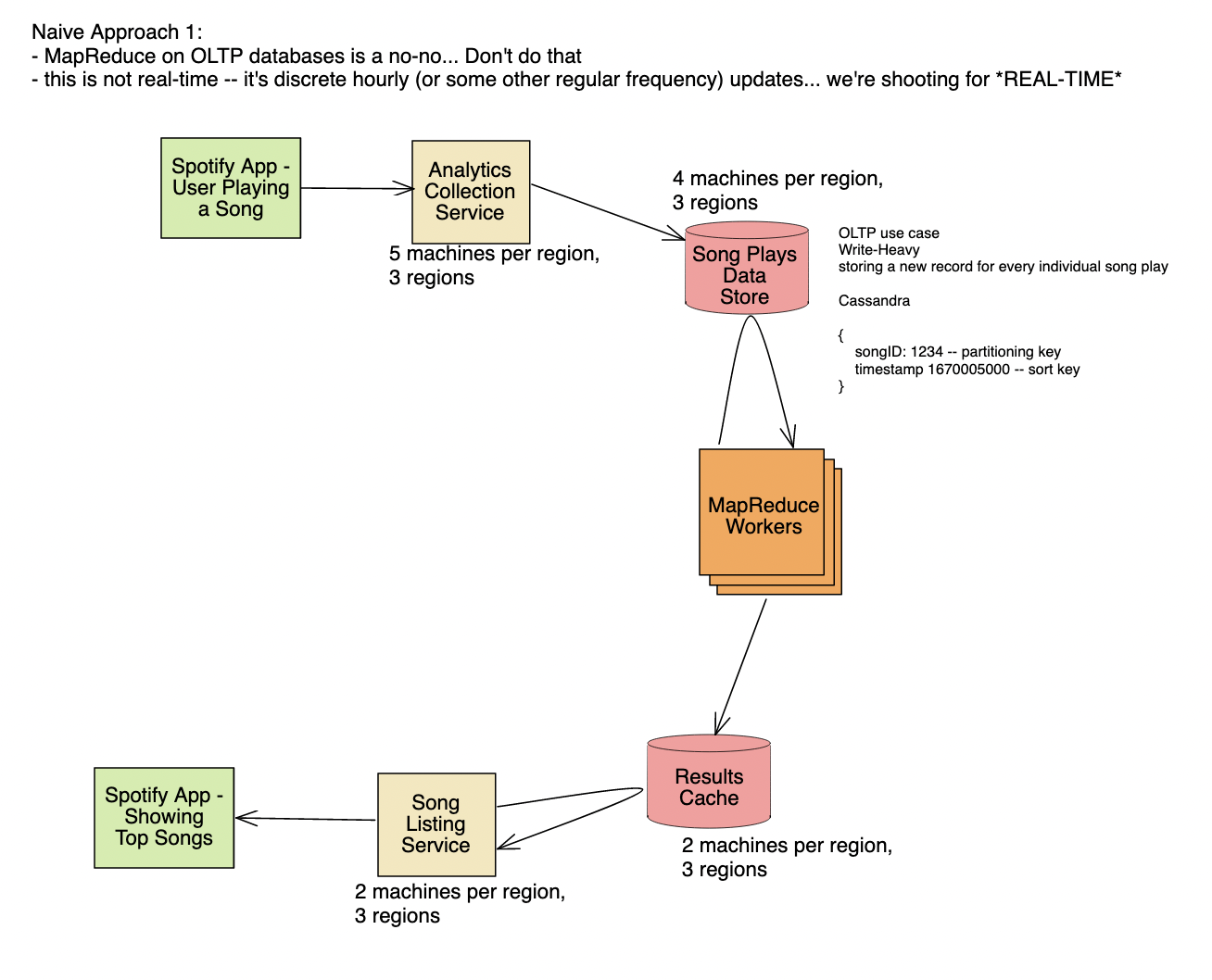

Most engineers think they can just throw `SELECT * FROM sales ORDER BY count DESC LIMIT 10` at a database and call it a day. That works for a blog with fifty readers. It fails miserably when you're dealing with millions of events per second: every event becomes a write, every ranking query re-sorts a table that never stops changing, and at that rate your database melts, your latency spikes into seconds, and your users bail. This is the core challenge of top k system design: finding the most frequent items in a massive, never-ending stream of data without counting every single thing perfectly.


The Brutal Reality of Scale

Scale changes the math. Honestly, when you’re handling data at the level of YouTube or TikTok, "exact" is a luxury you can’t afford.

Imagine you're building a system to track the top 100 most-watched videos globally in the last hour. You have billions of logs. If you try to keep an exact count for every single video ID in a hash map, the map needs one entry per distinct ID, and at that volume it can outgrow a single machine's memory long before the hour is up. Most of those IDs are "long-tail" items: videos watched by exactly one person. They're noise. They take up space and give you nothing back.
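To make the memory problem concrete, here is a minimal exact baseline in Python; the video IDs and counts are invented for illustration. The `Counter` needs one entry per distinct ID, so the long tail decides its size even though none of those entries ever reach the top.

```python
from collections import Counter


def exact_top_k(events, k):
    """Exact Top K: keeps one counter per distinct item ID."""
    counts = Counter(events)  # memory grows with the number of distinct IDs
    return counts.most_common(k), len(counts)


# Hypothetical stream: two popular videos plus a long tail watched once each.
events = ["v1"] * 500 + ["v2"] * 300 + [f"tail-{i}" for i in range(10_000)]
top, distinct = exact_top_k(events, 2)
print(top)       # [('v1', 500), ('v2', 300)]
print(distinct)  # 10002
```

Two entries carry the answer; the other 10,000 are pure overhead.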

In a real top k system design, we accept a trade-off. We trade a tiny bit of accuracy for massive gains in speed and memory efficiency. We use probabilistic data structures. It sounds fancy, but it basically means we're okay with being slightly wrong, within an error bound we choose up front, if it means the system doesn't crash.

Why Heavy Hitters Matter

In the industry, we call these top items "Heavy Hitters."

The Pareto Principle usually kicks in here: roughly 80% of your traffic comes from 20% of your items. On social platforms the skew can be even steeper, closer to 95/5. Because of this skew, we don't need to track the bottom 80% with any precision. We just need to make sure the big players don't slip through the cracks.


The Count-Min Sketch Approach

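One of the most famous tools for this is the Count-Min Sketch. Think of it as a 2D array of counters: a few rows (the depth), each with its own hash function, and a fixed number of columns (the width). When an event comes in, you hash the item ID once per row and increment the counter that hash points to in that row.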

When you want to know how many times a video was watched, you hash its ID the same way, read one counter per row, and take the minimum. Why the minimum? Because hashing causes collisions. Multiple items might land in the same counter, and collisions can only push a count up, never down. Every counter is therefore an overestimate (or exact), and the smallest one is the tightest estimate you have. It's a clever way to keep track of millions of items using a tiny, fixed amount of memory.
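A minimal Count-Min Sketch in Python, meant as an illustration rather than a tuned implementation. Deriving each row's hash function by salting BLAKE2b with the row number is an assumption made for brevity (production code usually uses faster hash families), and the width and depth values are arbitrary.

```python
import hashlib


class CountMinSketch:
    """Minimal Count-Min Sketch: `depth` rows of `width` counters."""

    def __init__(self, width: int, depth: int):
        self.width = width
        self.depth = depth
        self.table = [[0] * width for _ in range(depth)]

    def _column(self, item: str, row: int) -> int:
        # One hash function per row, derived by salting the item ID with the row number.
        digest = hashlib.blake2b(f"{row}:{item}".encode(), digest_size=8).digest()
        return int.from_bytes(digest, "little") % self.width

    def add(self, item: str, count: int = 1) -> None:
        for row in range(self.depth):
            self.table[row][self._column(item, row)] += count

    def estimate(self, item: str) -> int:
        # Collisions only ever inflate counters, so the minimum is the tightest upper bound.
        return min(self.table[row][self._column(item, row)] for row in range(self.depth))


cms = CountMinSketch(width=1000, depth=4)
cms.add("v1", 500)
for i in range(5000):
    cms.add(f"tail-{i}")
print(cms.estimate("v1"))  # at least 500; slightly more only if every row collided
```

Memory stays at `width * depth` counters no matter how many distinct IDs flow through; wider rows shrink the overestimate, and more rows make a bad estimate less likely.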

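But wait. Count-Min Sketch tells you the frequency of an item if you already know the ID. It doesn't magically tell you which IDs are the top ones. For that, you need something more, typically a small heap of candidate IDs that you update as each event's estimate comes back from the sketch.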
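One common way to close that gap, sketched here under simplifying assumptions: every event updates the sketch, and the item's fresh estimate decides whether it earns a seat in a table of K candidates. `TopKTracker` is a hypothetical name, and the table finds its weakest entry with a linear scan for readability; a production version would keep a min-heap so that eviction costs O(log K).

```python
import hashlib


class TopKTracker:
    """Count-Min Sketch for frequencies plus a K-entry candidate table for the IDs."""

    def __init__(self, k: int, width: int = 2048, depth: int = 4):
        self.k = k
        self.width = width
        self.table = [[0] * width for _ in range(depth)]
        self.candidates = {}  # item ID -> most recent estimated count

    def _columns(self, item: str):
        # One salted hash per row, as in a plain Count-Min Sketch.
        for row in range(len(self.table)):
            digest = hashlib.blake2b(f"{row}:{item}".encode(), digest_size=8).digest()
            yield row, int.from_bytes(digest, "little") % self.width

    def add(self, item: str) -> int:
        # 1. Increment the sketch and read back the item's new estimate (min over rows).
        values = []
        for row, col in self._columns(item):
            self.table[row][col] += 1
            values.append(self.table[row][col])
        estimate = min(values)
        # 2. Keep only the K items with the largest estimates as candidates.
        if item in self.candidates or len(self.candidates) < self.k:
            self.candidates[item] = estimate
        else:
            weakest = min(self.candidates, key=self.candidates.get)  # O(K) scan; a heap is O(log K)
            if estimate > self.candidates[weakest]:
                del self.candidates[weakest]
                self.candidates[item] = estimate
        return estimate

    def top(self):
        return sorted(self.candidates.items(), key=lambda kv: kv[1], reverse=True)


tracker = TopKTracker(k=2)
stream = ["v1"] * 300 + ["v2"] * 200 + [f"tail-{i}" for i in range(2000)] + ["v1"] * 10
for event in stream:
    tracker.add(event)
print(tracker.top())
```

Only the K candidate IDs are stored explicitly; every other ID exists only as contributions to the sketch's counters, which is what keeps memory fixed.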

Misconceptions About Real-Time Processing

People often confuse "real-time" with "instantaneous total accuracy."

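In a high-load top k system design, you're usually looking at a Lambda architecture, with a fast path and a slow path. (A Kappa architecture drops the separate batch layer and recomputes by replaying the stream through the same streaming job.)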

The fast path uses something like Apache Flink or Spark Streaming. It processes data in "windows"—say, 10-second chunks. It gives you a "good enough" list of trending items. Then, you have the batch layer (the slow path) that runs every hour or day, crunching the raw logs in S3 or BigQuery to give the definitive, audited numbers.


If you’re looking at a "Trending" sidebar, you're looking at the fast path. If you’re looking at a creator’s monthly payout dashboard, you’re looking at the slow path. Never mix the two.

The Algorithm That Actually Wins: HeavyKeeper

If you really want to impress in a system design interview or at a high-level architecture meeting, stop talking about Count-Min Sketch and start talking about HeavyKeeper.

A few years ago, researchers realized that older algorithms like Lossy Counting or Space Saving had flaws: with a tight memory budget they lost accuracy, and getting accurate results out of them took a lot of memory. HeavyKeeper uses a "count-with-exponential-decay" strategy.

Basically, each bucket stores a fingerprint of one item and a count. When a new item hits a bucket that's already occupied by a different item, it doesn't just overwrite it. It "attacks" the resident: the resident's count is decremented with a probability that shrinks exponentially as the count grows. A true heavy hitter, with a large count, almost always survives the attack. A rare item's count decays to zero, and the newcomer takes over the bucket. This is incredibly efficient at keeping the "heavy" items in the top slots while aggressively purging the noise.
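A compact sketch of the HeavyKeeper bucket update in Python, following the description above: each bucket holds a fingerprint and a count, and a colliding item decrements the resident with probability `decay_base ** -count`. The decay base of 1.08, the 64-bit fingerprints, and the class name are assumptions for illustration. The full algorithm also keeps a min-heap of the current top K, which is omitted here.

```python
import hashlib
import random


class HeavyKeeper:
    """Sketch of HeavyKeeper's count-with-exponential-decay buckets (top-K heap omitted)."""

    def __init__(self, width: int, depth: int, decay_base: float = 1.08, seed: int = 0):
        self.width = width
        self.decay_base = decay_base
        self.rng = random.Random(seed)
        # Each bucket is [fingerprint, count]; a count of 0 means the bucket is free.
        self.buckets = [[[None, 0] for _ in range(width)] for _ in range(depth)]

    @staticmethod
    def _hash(item: str, salt: str) -> int:
        digest = hashlib.blake2b(f"{salt}:{item}".encode(), digest_size=8).digest()
        return int.from_bytes(digest, "little")

    def _slots(self, item: str):
        # One bucket per row, chosen by a row-specific hash.
        for row, buckets in enumerate(self.buckets):
            yield buckets[self._hash(item, f"row{row}") % self.width]

    def add(self, item: str) -> None:
        fp = self._hash(item, "fp")
        for bucket in self._slots(item):
            if bucket[1] == 0:                       # free bucket: claim it
                bucket[0], bucket[1] = fp, 1
            elif bucket[0] == fp:                    # same item: count up
                bucket[1] += 1
            elif self.rng.random() < self.decay_base ** -bucket[1]:
                bucket[1] -= 1                       # "attack": decay the resident
                if bucket[1] == 0:                   # resident evicted, newcomer moves in
                    bucket[0], bucket[1] = fp, 1

    def estimate(self, item: str) -> int:
        # Only buckets still holding this item's fingerprint count toward its estimate.
        fp = self._hash(item, "fp")
        return max((count for owner, count in self._slots(item) if owner == fp), default=0)


hk = HeavyKeeper(width=8, depth=2)
for i in range(5000):
    hk.add(f"tail-{i}")          # long tail: every ID appears once
    if i % 5 == 0:
        hk.add("v1")             # heavy hitter: 1,000 occurrences in total
print(hk.estimate("v1"))         # close to 1000 despite only 16 buckets
print(hk.estimate("tail-4999"))  # 0 or 1
```

Once the heavy item's count reaches a few dozen, the chance that any single collision decays it is already tiny, so the 5,000 one-off IDs churn through the other buckets instead.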

Putting It All Together: The Architecture

A production-grade top k system design usually looks something like this:

- API Gateway: Receives the event (e.g., a "like" or a "view").

- Messaging Queue (Kafka): Buffers the events. This is non-negotiable. You need a buffer so your processing engine doesn't choke during a spike.

- Stream Processor (Flink): This is where the magic happens. It aggregates counts in sliding windows. It might use a Top-K heap to keep track of the current winners.

- Storage (Redis): The stream processor writes the current Top K list to a Redis Sorted Set (ZSET). Redis is perfect here because it handles ranking natively.

- Dashboard/API: The frontend queries Redis, which is lightning fast.

It sounds linear. It isn't. You have to handle partition keys in Kafka carefully. If you partition by ItemID, one "viral" video could overwhelm a single worker thread—this is the "Hot Partition" problem. You might need to add a "pre-aggregator" layer to combine counts before they even hit the main processing logic.
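A minimal sketch of that pre-aggregation idea in Python, with hypothetical function names and made-up worker batches: each upstream worker collapses its own slice of events into (item, count) pairs, and only those partial counts travel to the global ranker. In a real pipeline, stage one would run per time window inside the producer or a small Flink task; here it is plain in-memory code.

```python
from collections import Counter
import heapq


def pre_aggregate(batch):
    """Stage 1: a worker collapses its own events into (item, count) pairs."""
    return Counter(batch)


def global_top_k(partials, k):
    """Stage 2: the ranker merges the much smaller partial counts and picks the K largest."""
    merged = Counter()
    for partial in partials:
        merged.update(partial)  # Counter.update adds counts rather than replacing them
    return heapq.nlargest(k, merged.items(), key=lambda kv: kv[1])


# Hypothetical batches: a viral video dominates every worker's slice of traffic.
workers = [
    ["viral"] * 4000 + ["a"] * 10,
    ["viral"] * 3500 + ["b"] * 20,
    ["viral"] * 5000 + ["a"] * 5 + ["c"],
]
partials = [pre_aggregate(batch) for batch in workers]
print(sum(len(p) for p in partials))  # 7 pairs instead of 12,536 raw events
print(global_top_k(partials, 2))      # [('viral', 12500), ('b', 20)]
```

The viral item still ends up on one ranker, but it arrives as three numbers instead of 12,500 events.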

Dealing with Time

What does "Top" even mean? Top of all time? Top today? Top in the last 5 minutes?

Most modern systems use Sliding Windows. If you use a Tumbling Window (e.g., 1:00 to 1:10, then 1:10 to 1:20), you get weird "cliff" effects at the boundaries. A sliding window (e.g., "the last 10 minutes, updated every 10 seconds") is much smoother, but it's significantly harder to compute. You end up having to store sub-aggregates and merge them on the fly.
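One way to build the smoother sliding window from sub-aggregates, sketched in Python under stated assumptions: integer-second timestamps that arrive in order (no late events), one `Counter` per 10-second slide step, and a query that merges the steps inside the last 10 minutes. `SlidingTopK` is a hypothetical name; stream processors such as Flink provide sliding windows natively, so treat this as an illustration of the bookkeeping rather than something to hand-roll.

```python
from collections import Counter, deque
import heapq


class SlidingTopK:
    """Sliding window assembled from per-slide sub-aggregates (one Counter per step)."""

    def __init__(self, window_s: int = 600, slide_s: int = 10):
        if window_s % slide_s:
            raise ValueError("window_s must be a multiple of slide_s")
        self.window_s = window_s
        self.slide_s = slide_s
        self.buckets = deque()  # (bucket_start, Counter), oldest on the left

    def add(self, item: str, ts: int) -> None:
        # Assumes integer timestamps that never go backwards (no late events).
        start = ts - ts % self.slide_s
        if not self.buckets or self.buckets[-1][0] != start:
            self.buckets.append((start, Counter()))
        self.buckets[-1][1][item] += 1
        # Drop sub-aggregates that have slid completely out of the window.
        while self.buckets[0][0] <= start - self.window_s:
            self.buckets.popleft()

    def top_k(self, k: int, now: int):
        # Merge only the sub-aggregates that start inside (now - window_s, now].
        merged = Counter()
        for start, counts in self.buckets:
            if now - self.window_s < start <= now:
                merged.update(counts)
        return heapq.nlargest(k, merged.items(), key=lambda kv: kv[1])


w = SlidingTopK(window_s=600, slide_s=10)
for t in range(0, 100):
    w.add("a", t)
for t in range(100, 150):
    w.add("b", t)
print(w.top_k(2, now=150))  # [('a', 100), ('b', 50)]
print(w.top_k(2, now=650))  # [('b', 50), ('a', 40)]: the oldest "a" steps have slid out
```

A step is either fully in or fully out of the window, so results are accurate to one slide interval. Smaller steps mean smoother rankings and more sub-aggregates to merge on every read.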

Real-World Nuance: The "Filter" Problem

Sometimes the Top K isn't just about frequency. It's about frequency within a category.

"Top 10 Action Movies" or "Trending in Tokyo."

If you try to maintain a separate Top K heap for every possible filter combination, your memory usage explodes. This is where you have to get smart with your data partitioning. Usually, you’ll have a global stream and then "fan-out" to specific processors for the most popular categories, while using a more generic, query-time aggregation for the niche filters.
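A small in-memory sketch of that split, with hypothetical names and made-up catalog data: items carry tags, popular tags get their own counter that every event fans out to, and any other filter combination is answered by scanning the global counts at query time. In production the dedicated counters would more likely be Redis Sorted Sets or heaps than Python `Counter`s.

```python
from collections import Counter
import heapq


class FilteredTopK:
    """Dedicated counters for popular tags; query-time filtering for niche combinations."""

    def __init__(self, item_tags, hot_tags):
        self.item_tags = item_tags                       # item ID -> set of tags (hypothetical metadata)
        self.hot = {tag: Counter() for tag in hot_tags}  # fan-out targets
        self.global_counts = Counter()

    def add(self, item: str) -> None:
        self.global_counts[item] += 1
        for tag in self.item_tags.get(item, ()):
            if tag in self.hot:                          # fan out only to popular tags
                self.hot[tag][item] += 1

    def top_k(self, tags, k: int):
        tags = frozenset(tags)
        if len(tags) == 1:
            (tag,) = tags
            if tag in self.hot:                          # popular filter: dedicated counter
                return self.hot[tag].most_common(k)
        # Niche filter: scan the global counts, keeping items that carry every requested tag.
        matching = (
            (item, n) for item, n in self.global_counts.items()
            if tags <= self.item_tags.get(item, frozenset())
        )
        return heapq.nlargest(k, matching, key=lambda kv: kv[1])


catalog = {"m1": {"action", "tokyo"}, "m2": {"action"}, "m3": {"comedy", "tokyo"}}
ranker = FilteredTopK(catalog, hot_tags=["action"])
for item in ["m1"] * 3 + ["m2"] * 5 + ["m3"] * 2:
    ranker.add(item)
print(ranker.top_k({"action"}, 2))           # [('m2', 5), ('m1', 3)]
print(ranker.top_k({"action", "tokyo"}, 2))  # [('m1', 3)]
print(ranker.top_k({"tokyo"}, 2))            # [('m1', 3), ('m3', 2)]
```

Memory only grows for the tags you chose to fan out; the price is that niche queries pay for a scan.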

Actionable Steps for Implementation

If you are tasked with building a Top K system tomorrow, don't start by writing custom C++ code for HeavyKeeper.

- Audit your scale first. As a rough rule of thumb, if you're handling fewer than 5,000 events per second, a simple Redis Sorted Set is probably all you need. Don't over-engineer.

- Define your "K". Is it Top 10? Top 1000? The larger the K, the more memory you need. Usually, Top 100 is the sweet spot for user-facing features.

- Choose your window. Most businesses think they want "all-time" stats, but those are boring. Users want "now." Start with a 1-hour sliding window.

- Handle Hot Keys. If you’re using Kafka, implement a two-stage aggregation. Aggregate locally in your producer or a small Flink task before sending the data to the global ranker.

- Embrace Approximation. Tell your product manager that the real-time list might be 1% off from the daily audit. If they can’t accept that, they don't understand the physics of distributed systems.

The best systems are the ones that fail gracefully. In top k system design, that means even if your stream processor lags, your Redis cache still holds the "last known good" ranking, so the user never sees an empty list. Accuracy is great, but availability is king.

Next Steps for Design Mastery

Focus on the bottlenecks. Look at your current data pipeline and identify where the "Hot Partition" would happen if one item suddenly got 100x the normal traffic. Solve for that specific failure case using a two-stage merge-sort approach or a distributed frequency sketch. Once you can handle a viral event without manual intervention, you've moved past basic tutorials and into actual senior-level architecture.