You've probably seen those eerie, hyper-realistic videos floating around X and TikTok lately: the ones where a static childhood photo suddenly breathes, blinks, or turns into a cinematic sweep of a rainy street. Most of that magic is coming from Luma Labs. People are calling it the "Can You See What I See" Dream Machine because, honestly, it feels like peering directly into someone’s subconscious. It isn’t just another AI gimmick. We are past the era of "Will Smith eating spaghetti" nightmares. We’ve entered the era of high-fidelity video generation that actually understands physics, lighting, and, most importantly, intent.

It’s weird.

It's also incredibly powerful. When Luma AI dropped the Dream Machine, they didn't just release a tool; they broke the dam on high-quality video generation for the average person. You don’t need a massive GPU rig or a PhD in prompt engineering to make something that looks like it belongs in a Netflix documentary. You just need a prompt and a little bit of patience.

What Is the "Can You See What I See" Dream Machine, Anyway?

At its core, the Dream Machine is a highly advanced transformer model trained directly on video. Unlike older systems that tried to stitch together individual images (which is why they looked so jittery), this model predicts how pixels should move in a 3D space over time. When users talk about the "Can You See What I See" Dream Machine experience, they’re usually referring to the "Image-to-Video" feature. This is where the real emotional weight lies. You take a photo of a lost loved one, or a drawing from your childhood, and the AI "sees" the depth and motion that should be there.

It hallucinates, sure. But it hallucinates with a terrifying level of competence.

I’ve spent dozens of hours messing with the settings. One thing becomes clear immediately: the model is remarkably good at understanding cinematic language. If you tell it to do a "slow dolly zoom" or a "low-angle pan," it knows exactly what those camera movements imply for the perspective of the objects in the frame. It’s not just moving a 2D layer; it’s simulating a world.

The Physics of a Dream

One of the biggest hurdles for AI video has always been consistency. If a character walks behind a tree, do they come out the other side looking like the same person? Or do they morph into a puddle of digital soup? Luma’s engine handles "occlusion"—that’s the fancy word for things getting blocked—better than almost anything else currently available to the public.

It isn't perfect, though.

If you try to generate complex human interactions, like two people shaking hands or someone tying their shoelaces, the "dream" starts to fall apart. Fingers merge. Gravity becomes a suggestion. Yet, for environmental storytelling and atmospheric shots, it’s basically unmatched.

Why the Internet is Using it for Nostalgia

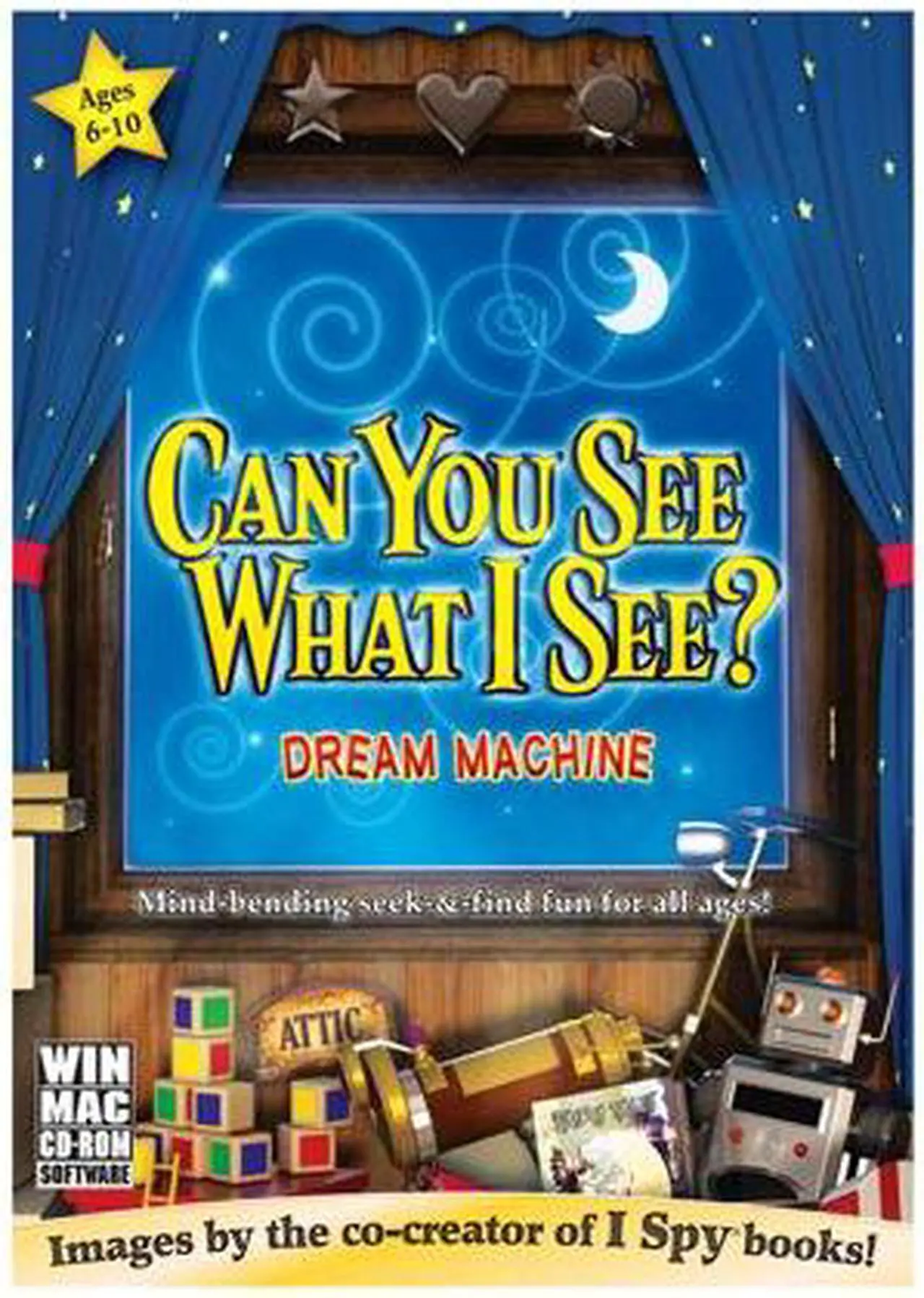

There is a specific trend where people are taking photos from the "Can You See What I See?" books by Walter Wick, the successor to the I Spy series, and running them through the Dream Machine. These books were already surreal. They featured intricate dioramas, toy soldiers, and dusty attics filled with trinkets.

Seeing these static memories come to life is deeply nostalgic. It’s a literal interpretation of the "Can You See What I See" Dream Machine concept. The AI takes a crowded shelf of 1990s toys and makes the clock on the wall tick. It makes the dust motes dance in the light of a miniature window.

This use case highlights a shift in how we interact with media. We aren't just consumers anymore; we are archivists of our own memories, using AI to "upscale" our past.

The Technical Reality vs. The Hype

Let's get real for a second. While the marketing makes it look like a one-click miracle, there is a learning curve.

Luma gives you 120 frames (about 5 seconds) of footage per generation. If you want a longer story, you have to use the "Extend" feature. This is where your skills as a director come into play. You have to guide the AI to continue the motion without losing the thread of the narrative. It’s a collaborative process. You are the director; the Dream Machine is a very talented, slightly chaotic cinematographer.
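To make the clip-length math concrete, here is a rough back-of-the-envelope sketch. It assumes 24 frames per second (which is what 120 frames in roughly 5 seconds implies) and, as a simplification that may not match the real tool, that every "Extend" adds another clip of the same length. The function names are made up for illustration.

```python
import math

FRAMES_PER_GENERATION = 120  # figure quoted above
ASSUMED_FPS = 24             # assumption: 120 frames over ~5 s implies ~24 fps


def clip_seconds(frames: int = FRAMES_PER_GENERATION, fps: int = ASSUMED_FPS) -> float:
    """Length of one generated clip in seconds."""
    return frames / fps


def generations_needed(target_seconds: float) -> int:
    """Initial generation plus extensions needed to reach a target length.

    Assumes each extension adds one full clip, which is a simplification.
    """
    return math.ceil(target_seconds / clip_seconds())


print(clip_seconds())          # 5.0
print(generations_needed(30))  # 6
```

So a 30-second story works out to about six generations under these assumptions, which is a useful number to have in mind before you start spending credits.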

- Prompting matters more than you think. If you just type "dog running," you get something generic. If you type "Golden Retriever sprinting through a sun-drenched meadow, 4k, cinematic motion blur, 24fps," the results are night and day.

- Image quality is the ceiling. If you upload a blurry, low-res screenshot, the video will be blurry and low-res. Use high-quality JPGs or PNGs for the best results.

- The "Enhance Prompt" toggle. Luma has a feature that automatically rewrites your prompt to be more descriptive. Use it. It understands the model's internal "language" better than you do.

Comparing the Giants: Luma vs. Sora vs. Kling

We can't talk about the "Can You See What I See" Dream Machine without mentioning the competition. OpenAI has Sora, which looks mind-blowing but remains locked away for most people. Then there’s Kling, the Chinese powerhouse that can generate videos up to two minutes long.

Why is Luma winning the mindshare right now?

Accessibility.

You can sign up with a Google account and start making videos in thirty seconds. There's no waiting list of six months. There's no "pro-only" wall for basic experimentation. Luma understood that the first company to put high-end video AI into the hands of meme-makers and casual creators would win the culture war.

Kling is impressive, but it’s harder to access outside of China. Sora is a ghost. Luma is here, it’s fast, and it’s mostly free to try.

Navigating the Ethical Gray Zones

We have to talk about the elephant in the room. Deepfakes. Misinformation. The "uncanny valley."

When you give people a tool that can turn any image into a realistic video, things get complicated fast. Luma has filters in place. You can’t (easily) generate videos of celebrities or public figures doing compromising things. But the technology is moving faster than the guardrails.

There's also the artist's perspective. I’ve talked to several concept artists who feel a mix of awe and dread. On one hand, the "Can You See What I See" Dream Machine can help them storyboard a film in hours rather than weeks. On the other hand, it’s trained on a massive dataset of existing videos and images. The question of "who owns the style?" is still being fought in courts.

Despite the controversy, the creative genie is out of the bottle. You can't un-invent the ability to animate the world with a text prompt.

Tips for Better Generations

If you’re going to sit down and use this thing, don't waste your credits on random guesses. Here is a workflow that actually works.

- Start with an Image. Text-to-video is "hard mode." Image-to-video gives the AI a grounding in reality. It knows what the colors should be and where the objects are located.

- Describe the Motion, Not the Subject. If you’ve uploaded a photo of a car, the AI already knows it’s a car. Tell it how to move. "The car accelerates quickly away from the camera, kicking up a cloud of red desert dust."

- Use Negative Prompting (if available). While Luma’s interface is simple, some third-party wrappers allow you to specify what you don't want. "No morphing, no extra limbs, no blurry faces."

- The 3-Second Rule. The first three seconds are usually the most stable. If you’re making a montage, plan to cut your clips short.
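If you want to apply the 3-second rule to a folder of downloaded clips, a tiny helper can build the trim commands for you. This is only a sketch: it assumes you have ffmpeg installed, the file names are hypothetical, and the `_trimmed` naming scheme is my own invention. It builds the command but does not run it.

```python
from pathlib import Path

STABLE_SECONDS = 3.0  # the "3-second rule" from the tips above


def trim_command(src: str, keep_seconds: float = STABLE_SECONDS) -> list[str]:
    """Build an ffmpeg argument list that keeps only the start of a clip."""
    source = Path(src)
    # Hypothetical naming scheme: shot.mp4 -> shot_trimmed.mp4
    target = source.with_name(f"{source.stem}_trimmed{source.suffix}")
    # "-t" limits the output duration; ffmpeg re-encodes by default
    return ["ffmpeg", "-i", str(source), "-t", str(keep_seconds), str(target)]


# Hypothetical clip names, printed as shell-style commands
for clip in ["attic.mp4", "clock.mp4"]:
    print(" ".join(trim_command(clip)))
```

To actually run a command, you could pass the returned list to `subprocess.run`. Printing it first lets you sanity-check the output names before anything gets written.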

What’s Next for AI Video?

We are looking at a future where "content" becomes personalized in real-time. Imagine a video game where the cutscenes aren't pre-rendered but are generated on the fly based on your specific character's actions. Or a personalized "Can You See What I See?" book where the hidden objects change every time you open it.

The "Can You See What I See" Dream Machine is just version 1.0 of this reality.

In a year, we will likely have 4K resolution at 60 frames per second with perfect temporal consistency. We will be able to edit specific parts of a video—like changing the color of a character's shirt—without regenerating the whole scene.

It’s a bit scary. It’s a lot of fun.

Actionable Steps for Creators

If you want to master this tool before it becomes completely saturated, here is what you should do today:

- Audit your photo library. Look for high-contrast images with clear subjects. These work best for the Dream Machine's initial "hook."

- Practice "Prompt Stacking." Take one video you like, and try to recreate it five times with slight variations in the text. This teaches you exactly how the model reacts to specific words.

- Join the Discord. Luma has a massive community. People share their prompts and their failures there. It is the fastest way to see what the "meta" is for AI video.

- Focus on Story, Not Tech. At the end of the day, a 5-second clip of a cool-looking robot is just a 5-second clip. The creators who are gaining traction are the ones using these clips to tell actual stories, even if they are only 30 seconds long.
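The "Prompt Stacking" exercise above is easy to set up with a few lines of code. Here is a minimal sketch that takes one base prompt and produces five variants, each with a single change, so you can see which words actually move the needle. The modifier list is purely illustrative (a few phrases come from earlier in this article, the rest are made up), and nothing here talks to Luma; you would paste the results in by hand.

```python
BASE_PROMPT = "Golden Retriever sprinting through a sun-drenched meadow"

# Illustrative modifiers; swap in whatever you want to test
MODIFIERS = [
    "cinematic motion blur",
    "slow dolly zoom",
    "low-angle pan",
    "4k, 24fps",
    "soft morning fog",
]


def stack_prompts(base: str, modifiers: list[str]) -> list[str]:
    """Return one prompt per modifier, changing a single thing each time."""
    return [f"{base}, {modifier}" for modifier in modifiers]


for number, prompt in enumerate(stack_prompts(BASE_PROMPT, MODIFIERS), start=1):
    print(f"{number}. {prompt}")
```

Changing one thing per run is the whole point: if two modifiers change at once, you can't tell which one caused the difference.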

The barrier to entry for filmmaking just hit the floor. The only thing left is your ability to imagine something worth seeing. Go to the Luma Labs website, sign in, and start experimenting with your own imagery. The first few tries might look like a fever dream, but eventually, you'll hit that one generation that looks like pure cinema. That's the moment you'll understand why this is changing everything.