You’ve spent weeks designing the perfect layout. You wrote ten blog posts that are, honestly, pretty great. You hit publish. Then you wait. And wait. A week later, you type site:yourdomain.com into the search bar and see... nothing. It’s frustrating. Most people think that once a site is live, Google just knows. But the "Google index my website" struggle is real, and it’s usually because of a few technical bottlenecks that have nothing to do with your content quality and everything to do with how Google’s spiders crawl the web.

Google doesn't owe you an index entry. Seriously.


The web is massive. We're talking hundreds of billions of pages. To save on "crawl budget," Googlebot—the automated software that explores the web—is picky. It won't index a page just because it exists. It has to find it, understand it, and deem it worthy of a spot in its index. If your site is stuck in the "Discovered - currently not indexed" limbo, you aren't alone.

The Brutal Truth About Why Google Won't Index Your Website

Most advice tells you to just wait. That’s bad advice. If your site isn't showing up after 48 hours, something might be blocking the door.

Check your robots.txt file immediately. You'd be surprised how many developers accidentally leave a "Disallow: /" rule in there from the staging phase. It’s a tiny line that basically tells Google, "Go away, don't look at anything here." You can check it by opening yourdomain.com/robots.txt in your browser. If you see "Disallow: /" with nothing after the slash under "User-agent: *" or "User-agent: Googlebot," you've found your culprit.
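If you'd rather test this from a script, here's a minimal sketch using Python's built-in `urllib.robotparser`. It feeds the rules in directly with `parse()` so you can see how a leftover staging rule differs from a normal one; both rule sets and the `example.com` URLs are placeholders. For your live file, you'd call `set_url("https://yourdomain.com/robots.txt")` and `read()` instead.

```python
from urllib.robotparser import RobotFileParser

def googlebot_allowed(robots_lines: list[str], url: str) -> bool:
    """Return True if the given robots.txt rules let Googlebot fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_lines)  # parse rules already in memory (no network call)
    return parser.can_fetch("Googlebot", url)

# A leftover staging rule: a lone slash blocks the whole site.
staging_rules = [
    "User-agent: *",
    "Disallow: /",
]

# A typical production rule set: only the admin area is blocked.
production_rules = [
    "User-agent: *",
    "Disallow: /wp-admin/",
]

print(googlebot_allowed(staging_rules, "https://example.com/"))             # False
print(googlebot_allowed(production_rules, "https://example.com/blog/post"))  # True
```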

Another common headache is the "noindex" tag. This is a snippet of HTML in the <head> section of your pages. If you're using WordPress, there’s a literal checkbox in the Settings > Reading menu that says "Discourage search engines from indexing this site." If that’s checked, Google will respect your wish and stay away. It’s like putting a "No Trespassing" sign on your front lawn and then wondering why the mailman didn't deliver the package.
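The tag itself looks like `<meta name="robots" content="noindex">`, and a quick way to spot it is to parse the page's HTML. This sketch uses Python's standard `html.parser` and only checks `<meta name="robots">` and `<meta name="googlebot">` tags. It assumes you already have the page HTML as a string, and it won't catch a noindex sent as an `X-Robots-Tag` HTTP header, so check your response headers separately.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser lowercases tag/attribute names; values can be None.
        attrs = {name: (value or "") for name, value in attrs}
        if attrs.get("name", "").lower() in ("robots", "googlebot"):
            # content is a comma-separated list, e.g. "noindex, nofollow"
            for directive in attrs.get("content", "").split(","):
                self.directives.add(directive.strip().lower())

def has_noindex(html: str) -> bool:
    """Return True if any robots/googlebot meta tag on the page says noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

page = '<html><head><meta name="robots" content="noindex, nofollow"></head><body></body></html>'
print(has_noindex(page))  # True
```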

Understanding the Crawl Budget

Google isn't an infinite resource. It allocates a certain amount of time to "crawl" each site. If your site is slow—I mean really sluggish—Googlebot might give up before it even reaches your best content. Large images, unoptimized JavaScript, and cheap hosting can all eat into this budget. Think of it like a 30-second shopping spree; if you spend 20 seconds trying to open the store door, you aren't going to get much in your cart.
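To put rough numbers on that analogy, here's a deliberately simplified toy model in Python. It assumes a fixed time budget and pages fetched one after another; Google doesn't publish a per-site time budget, and real crawling is parallel and spread over days, so treat this as an illustration of the idea, not how Googlebot actually schedules work.

```python
def pages_crawled(fetch_seconds: list[float], budget_seconds: float) -> int:
    """Count how many pages fit in a fixed time budget when fetched in order.

    Toy model only: assumes one fixed budget and strictly sequential fetches.
    """
    used = 0.0
    count = 0
    for seconds in fetch_seconds:
        if used + seconds > budget_seconds:
            break  # the next page would blow the budget, so the "crawler" stops
        used += seconds
        count += 1
    return count

# Same hypothetical 10-page site and 30-second budget, slow vs. fast server.
slow_site = [4.0] * 10   # 4 seconds per page
fast_site = [0.5] * 10   # half a second per page
print(pages_crawled(slow_site, 30))  # 7
print(pages_crawled(fast_site, 30))  # 10
```

The slow server loses three pages out of ten under the same budget, which is the whole point of the shopping-spree comparison.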

Gary Illyes from Google has mentioned multiple times on the Search Off the Record podcast that the quality of the site plays a huge role in how often Googlebot visits. If your site is thin or lacks original value, Google just won't prioritize it. It’s a harsh reality.

The Fast Track: How to Force Google to Index My Website

If you want speed, you have to use Google Search Console (GSC). It’s free. It’s essential. If you haven't verified your site in GSC yet, do it now.

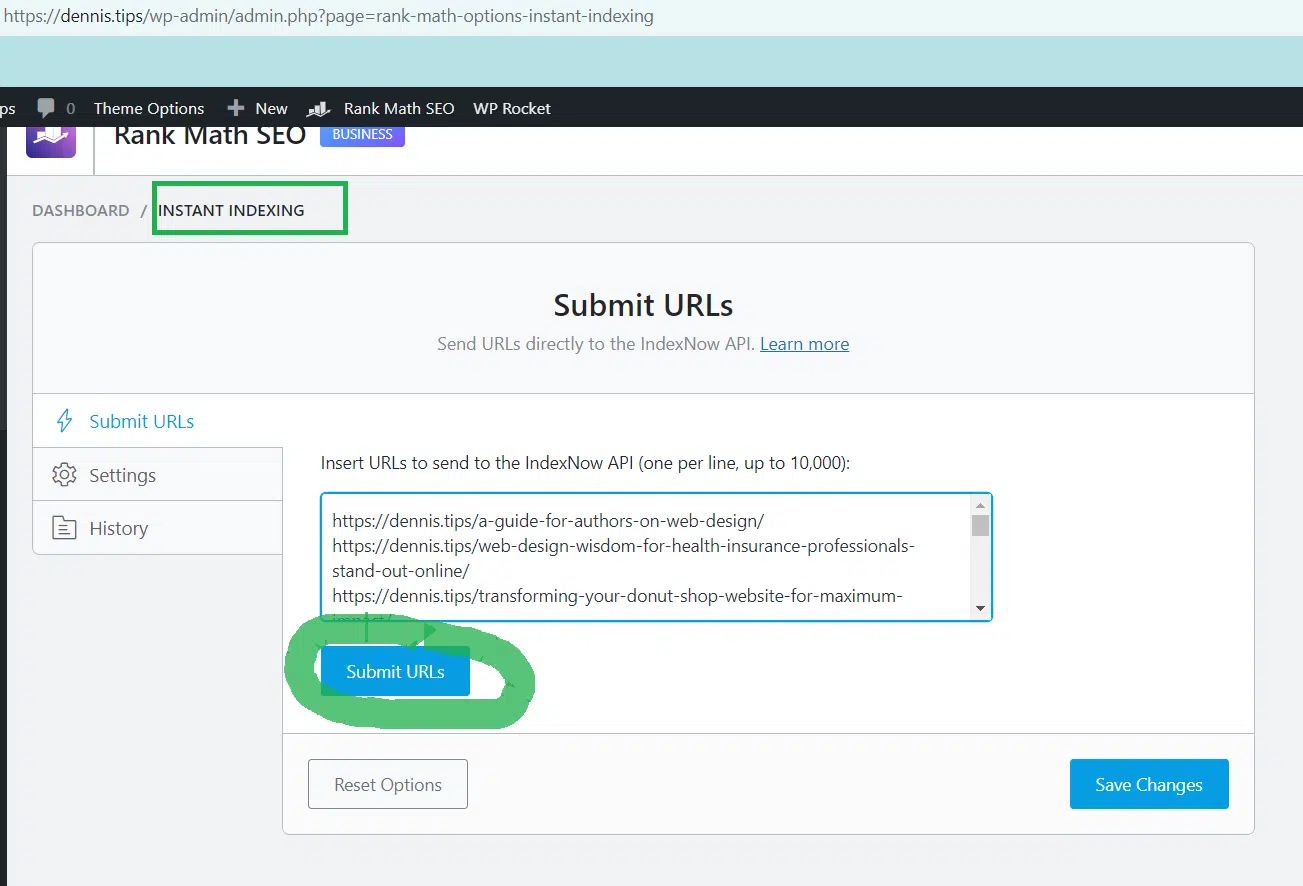

Once you’re in, the URL Inspection Tool is your best friend. Paste your URL into the top bar. If it says "URL is not on Google," click that "Request Indexing" button. This doesn't guarantee an immediate result, but it pushes your URL into a priority queue. It’s the digital equivalent of tapping Google on the shoulder and saying, "Hey, look at this."
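If you manage lots of URLs, Search Console also offers a URL Inspection API that returns the same status the tool shows. The sketch below only builds the POST request with Python's standard library. It assumes you already have an OAuth 2.0 access token for a verified property, and the site and page URLs are placeholders. Note that this API reports status only: it has no equivalent of the "Request Indexing" button, so that step stays manual.

```python
import json
import urllib.request

INSPECT_ENDPOINT = "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect"

def build_inspection_request(site_url: str, page_url: str, access_token: str) -> urllib.request.Request:
    """Build (but don't send) a URL Inspection API request for one page."""
    body = json.dumps({"inspectionUrl": page_url, "siteUrl": site_url}).encode("utf-8")
    return urllib.request.Request(
        INSPECT_ENDPOINT,
        data=body,
        headers={
            # Assumes a token obtained elsewhere via your own OAuth flow.
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

def coverage_state(response: dict) -> str:
    """Pull the coverage state (e.g. why a URL isn't indexed) out of a parsed response."""
    result = response.get("inspectionResult", {}).get("indexStatusResult", {})
    return result.get("coverageState", "unknown")

request = build_inspection_request(
    "sc-domain:example.com",             # placeholder domain property
    "https://example.com/new-post/",     # placeholder page
    "YOUR_ACCESS_TOKEN",                 # placeholder token
)
print(request.get_method(), request.full_url)
```

To actually send it, pass the request to `urllib.request.urlopen()`, decode the JSON body, and hand the resulting dict to `coverage_state()`.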

Sitemaps Aren't Optional

An XML sitemap is essentially a map for Googlebot. It lists every page you want indexed. Without one, Google has to rely on finding links to your pages, which is a slow process if your internal linking is a mess.
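If your CMS doesn't generate one for you, a bare-bones sitemap is easy to build. This Python sketch uses `xml.etree.ElementTree` and writes only the required `<loc>` element for each URL; the URLs are placeholders, and optional tags such as `<lastmod>` are left out.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls: list[str]) -> str:
    """Return a minimal sitemap.xml document listing the given absolute URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # <loc> is the only required child; it must be a full, absolute URL.
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

print(build_sitemap([
    "https://example.com/",
    "https://example.com/blog/first-post/",
]))
```

Save the output as sitemap.xml at the root of your site so it's reachable at yourdomain.com/sitemap.xml.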

- Generate a sitemap (most SEO plugins do this automatically).

- Submit the URL (usually sitemap.xml) in the Sitemaps section of GSC.

- Check for errors. If Google can't read your sitemap, it won't use it.
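For that last step, a few common sitemap errors can be caught before you submit. This Python sketch parses the file with `xml.etree.ElementTree` and flags malformed XML, a missing sitemaps.org namespace, relative URLs, and files over the protocol's 50,000-URL limit. It isn't a full validator: it doesn't handle sitemap index files or check the 50 MB size limit, and the sample sitemaps are made up.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol

def sitemap_problems(xml_bytes: bytes) -> list[str]:
    """Return human-readable problems found in a sitemap (an empty list means it looks OK)."""
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    if root.tag != "{%s}urlset" % NS["sm"]:
        return [f"unexpected root element {root.tag!r}"]
    problems = []
    locs = [loc.text or "" for loc in root.findall("sm:url/sm:loc", NS)]
    if not locs:
        problems.append("no <url><loc> entries found")
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the {MAX_URLS} limit; split into several sitemaps")
    for loc in locs:
        parsed = urlparse(loc.strip())
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            problems.append(f"not an absolute URL: {loc!r}")
    return problems

good = b'<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"><url><loc>https://example.com/</loc></url></urlset>'
bad = b'<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"><url><loc>/blog/post</loc></url></urlset>'
print(sitemap_problems(good))  # []
print(sitemap_problems(bad))   # ["not an absolute URL: '/blog/post'"]
```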

Getting into Google Discover: The Real Gold Mine

Discover is different from Search. In Search, people look for you. In Discover, Google pushes your content to people based on their interests. To get there, your site must first be indexed properly. But there’s a catch. Discover is highly visual and highly "fresh."

If you want your content to show up on the home screens of millions of Android and iPhone users, you need high-resolution images: Google's Discover guidance asks for images at least 1200 pixels wide. You also need the max-image-preview:large setting in your robots meta tag, which lets Google show those images at full size. Without both, your content is far less likely to earn the large, eye-catching preview that makes Discover cards stand out.
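The robots meta tag for this looks like `<meta name="robots" content="max-image-preview:large">`. As a rough pre-publish check, this Python sketch confirms the tag is present and that your main image clears 1,200 pixels. The `hero_image_width` argument is a hypothetical input you'd read from your image file or CMS; the function doesn't measure images itself.

```python
from html.parser import HTMLParser

MIN_WIDTH_PX = 1200  # minimum width Google's Discover guidance asks for

class DiscoverCheck(HTMLParser):
    """Record whether a page opts into large image previews via its robots meta tag."""

    def __init__(self) -> None:
        super().__init__()
        self.large_previews = False

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attrs.get("name", "").lower() == "robots":
            directives = [d.strip().lower() for d in attrs.get("content", "").split(",")]
            if "max-image-preview:large" in directives:
                self.large_previews = True

def discover_ready(html: str, hero_image_width: int) -> bool:
    """True if the page allows large previews and the (hypothetical) hero image is wide enough."""
    checker = DiscoverCheck()
    checker.feed(html)
    return checker.large_previews and hero_image_width >= MIN_WIDTH_PX

page = '<head><meta name="robots" content="max-image-preview:large"></head>'
print(discover_ready(page, 1600))  # True
print(discover_ready(page, 800))   # False
```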

Content for Discover needs to be timely. It’s not just about "Google index my website" tactics; it’s about creating something that triggers an emotional response or answers a trending question. Think "Why X is happening" rather than "The history of X."

Common Myths That Waste Your Time

Don't buy 5,000 "indexing backlinks" from a shady site on Fiverr. It doesn't work. In fact, it might get you penalized. Google’s algorithms are smart enough to recognize a "link farm" from a mile away.


Social media links don't directly lead to indexing either. While a viral tweet might bring traffic, the links themselves are usually marked "nofollow," a hint telling Google not to pass SEO authority (or "link juice") through them. However, the traffic from those links can signal to Google that your page is important, which might trigger a crawl.
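You can see how a link is marked by reading its `rel` attribute. This Python sketch uses `html.parser` to split links into plain ones and ones carrying `nofollow`, `ugc`, or `sponsored`, the rel values Google treats as hints rather than endorsements. The sample HTML is made up.

```python
from html.parser import HTMLParser

HINT_VALUES = {"nofollow", "ugc", "sponsored"}

class LinkAudit(HTMLParser):
    """Sort <a href> links into 'followed' and 'hinted' (nofollow/ugc/sponsored)."""

    def __init__(self) -> None:
        super().__init__()
        self.followed: list[str] = []
        self.hinted: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = {name: (value or "") for name, value in attrs}
        href = attrs.get("href")
        if not href:
            return
        # rel can hold several space-separated tokens, e.g. "nofollow noopener"
        rel_tokens = set(attrs.get("rel", "").lower().split())
        (self.hinted if rel_tokens & HINT_VALUES else self.followed).append(href)

audit = LinkAudit()
audit.feed(
    '<a href="/blog/">Blog</a>'
    '<a href="https://example.com/post" rel="nofollow noopener">Shared post</a>'
)
print(audit.followed)  # ['/blog/']
print(audit.hinted)    # ['https://example.com/post']
```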

The Role of Internal Linking

If you have a page that is indexed and ranking well, link from that page to your new, unindexed page. This is the most natural way to get Googlebot to find new content, because it follows the path of the link. If your new page is "orphaned," meaning no other page on your site links to it, Google might never find it. Even if it does discover the page through your sitemap, a page with no internal links looks unimportant and may wait a long time to be crawled.
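Orphans are easy to spot once you have a list of your pages and the internal links between them. This Python sketch runs a breadth-first search from the homepage and reports every page it can't reach. It assumes you've already exported both inputs (for example, the page list from your sitemap and the links from a site crawler), and the paths are hypothetical. Strictly, it's a little broader than "orphan": it also catches pages linked only from other unreachable pages, which Googlebot can't reach by following links either.

```python
from collections import deque

def find_orphans(all_pages: set[str], links: dict[str, list[str]], start: str = "/") -> list[str]:
    """Return pages that can't be reached by following internal links from `start`."""
    reachable = {start}
    queue = deque([start])
    while queue:  # breadth-first search over internal links
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in reachable:
                reachable.add(target)
                queue.append(target)
    return sorted(all_pages - reachable)

# Hypothetical site: the new post links out to the homepage, but nothing links to it.
pages = {"/", "/about/", "/blog/", "/blog/old-post/", "/blog/new-post/"}
links = {
    "/": ["/blog/", "/about/"],
    "/blog/": ["/blog/old-post/"],
    "/blog/new-post/": ["/"],
}
print(find_orphans(pages, links))  # ['/blog/new-post/']
```

The fix is exactly what the paragraph describes: add a link from "/blog/" or another indexed page to the new post, and it drops off the list.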

Technical Gremlins: Canonical Tags and Redirects

Sometimes, Google does index your site, but it chooses the wrong version. This happens with "canonicalization." If you have two versions of a page (like a mobile version and a desktop version, or a version with www and one without), Google will pick one as the "canonical" version. If it picks the wrong one, your preferred page won't show up.

Always define your canonical URL in the code. It tells Google, "Even if you find this content elsewhere, this specific URL is the master version."
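The canonical tag sits in the `<head>` and looks like `<link rel="canonical" href="https://yourdomain.com/your-page/">`. This Python sketch pulls that `href` out of a page's HTML and compares it with the URL you expect, a quick way to catch a template that points pages at the wrong host (www vs. non-www, for instance). The URLs are placeholders.

```python
from html.parser import HTMLParser
from typing import Optional

class CanonicalFinder(HTMLParser):
    """Grab the href of the first <link rel="canonical"> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}
        rel_tokens = attrs.get("rel", "").lower().split()
        if tag == "link" and "canonical" in rel_tokens and self.canonical is None:
            self.canonical = attrs.get("href")

def canonical_matches(html: str, expected: str) -> bool:
    """True if the page declares `expected` as its canonical URL."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical == expected

page = '<head><link rel="canonical" href="https://www.example.com/guide/"></head>'
print(canonical_matches(page, "https://www.example.com/guide/"))  # True
print(canonical_matches(page, "https://example.com/guide/"))      # False
```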

Also, watch out for redirect chains. If Googlebot has to jump through three different 301 redirects to get to your page, every extra hop wastes crawl budget, and a long enough chain makes it give up with a redirect error. It likes direct paths. Keep your redirects simple: A goes to B. That's it.
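To find chains before Googlebot does, you can follow each old URL through your redirect rules. This Python sketch assumes your redirects are available as a simple old-to-new mapping (a hypothetical export from your server config or redirect plugin). It reports the full chain for a URL, flags loops, and builds a flattened map where every source points straight at its final destination. The default of 10 hops mirrors the limit Google's crawling documentation gives for Googlebot.

```python
def redirect_chain(redirects: dict[str, str], start: str, max_hops: int = 10) -> list[str]:
    """Follow `start` through an old->new redirect map and return every URL visited.

    Raises ValueError on a redirect loop or a chain longer than `max_hops`.
    """
    chain = [start]
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [target]))
        chain.append(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} hops starting at {start}")
    return chain

def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every old URL straight at its final destination: A goes to B, that's it."""
    return {source: redirect_chain(redirects, source)[-1] for source in redirects}

# Hypothetical rules that piled up over several redesigns.
rules = {"/old": "/older", "/older": "/new-ish", "/new-ish": "/final"}
print(redirect_chain(rules, "/old"))  # ['/old', '/older', '/new-ish', '/final']
print(flatten(rules))  # {'/old': '/final', '/older': '/final', '/new-ish': '/final'}
```

Loading the flattened map back into your redirect rules turns every chain into a single hop.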

Indexing is Only the Beginning

Getting indexed is just getting your foot in the door. It doesn't mean you'll rank on page one. To rank, you need relevance and authority. But you can't rank if you aren't in the system.

Check your "Page indexing" report in Search Console weekly. It will tell you exactly why certain pages are being left out. Maybe they're flagged "Duplicate without user-selected canonical." Maybe they're "Not found (404)." These reports are the closest thing you have to a direct conversation with Google’s technical team.

If you’ve done everything right—submitted the sitemap, requested indexing, fixed the robots.txt—and you’re still not seeing results after two weeks, look at your content. Is it unique? Does it provide more value than what’s already on page one? Sometimes "Google won't index my website" is actually Google saying "This content isn't adding anything new to the web."

The 2026 Indexing Checklist

- Verify GSC: Ensure your site is connected to Google Search Console.

- Check Robots.txt: Make sure you aren't accidentally blocking crawlers.

- Audit for Noindex Tags: Look through your CMS settings for any "discourage search engines" toggles.

- Submit XML Sitemap: Give Google the direct path to your pages.

- Use the Inspection Tool: Manually request indexing for your most important pages.

- Fix Core Web Vitals: A fast site gets crawled more often.

- Build Internal Links: Connect your new pages to your old, successful ones.

- Wait 48-72 Hours: Give the system time to process the request.

Stop obsessing over the "Request Indexing" button and start looking at your site’s overall health. A healthy, fast, well-linked site will never have to beg Google for an index entry. It will happen naturally because Google wants to show its users the best content available. If you make your site the best, the indexing will follow.


Check your Search Console today. Look specifically at the "Why pages aren't indexed" table in your Page indexing report. Identify the top three reasons pages are being left out (common ones include "Redirect error," "Not found (404)," and "Crawled - currently not indexed") and fix those first. Once those technical barriers are gone, use the URL Inspection tool to resubmit the fixed pages. This proactive approach is the surest way to keep your site visible in an increasingly crowded digital landscape.
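If you export that table, a few lines of Python will rank the reasons for you. This sketch assumes a CSV with "Reason" and "Pages" columns; that layout is an assumption, so check your export's headers and adjust the names. A reason can show up on more than one row (for example, with different sources), so the sketch sums them. The sample data is invented.

```python
import csv
import io
from collections import Counter

def top_reasons(csv_text: str, n: int = 3) -> list[tuple[str, int]]:
    """Sum the page counts per reason and return the n largest.

    Assumes (hypothetically) a header row with "Reason" and "Pages" columns.
    """
    totals = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        totals[row["Reason"].strip()] += int(row["Pages"])
    return totals.most_common(n)

# Invented sample in the assumed layout.
sample = """Reason,Pages
Crawled - currently not indexed,40
Duplicate without user-selected canonical,20
Redirect error,12
Not found (404),7
Redirect error,3
"""
print(top_reasons(sample))
# [('Crawled - currently not indexed', 40), ('Duplicate without user-selected canonical', 20), ('Redirect error', 15)]
```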