Integrating AI into Java apps used to feel like pulling teeth. You had to juggle disparate APIs, manage state manually, and pray the context window didn't explode. Then came the Spring AI MCP server implementation, and honestly, the game changed overnight. It’s not just another library update. It is a fundamental shift in how we connect Large Language Models (LLMs) to the actual data sitting in our enterprise systems.

Most people think of Spring AI as just a wrapper for OpenAI or Anthropic. They're wrong. With the Model Context Protocol (MCP), Spring is basically giving your AI a pair of hands.

The Problem with LLM Isolation

LLMs are brilliant but trapped. They’re like a genius in a room with no internet and a bookshelf frozen at their training cutoff. You can talk to them, but they can't "see" your SQL database or your Jira tickets unless you manually feed that data in. This is where the Spring AI MCP server comes into play. It acts as the standardized bridge.

Before MCP, if you wanted your AI to check a customer's recent orders, you had to write custom "tools" or "functions." You’d define a JSON schema, handle the plumbing, and hope the LLM understood how to call it. If you switched from OpenAI to Claude, you often had to rewrite half that logic. It was a mess.

MCP fixes this by standardizing the connection. Think of it like USB-C for AI models. It doesn't matter who made the device; if it has the port, it just works.
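Concretely, the "port" is a fixed message shape. An MCP server advertises each tool with a name, a human-readable description, and a JSON Schema for its input (the `inputSchema` field in a `tools/list` result). The plain-Java sketch below only renders that descriptor as a JSON string. The `ToolDescriptor` record and the `get_recent_orders` tool are hypothetical illustrations, not Spring AI types.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical model of one entry in an MCP tools/list result.
// Not a Spring AI type; it only shows the shape any MCP client expects.
record ToolDescriptor(String name, String description, List<String> requiredStringParams) {

    // Minimal JSON string quoting: escapes backslashes and double quotes only.
    private static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    // Renders {"name":..., "description":..., "inputSchema":{...}}.
    // Every parameter is treated as a required string to keep the sketch short.
    String toJson() {
        String properties = requiredStringParams.stream()
                .map(p -> quote(p) + ":{\"type\":\"string\"}")
                .collect(Collectors.joining(","));
        String required = requiredStringParams.stream()
                .map(ToolDescriptor::quote)
                .collect(Collectors.joining(","));
        return "{\"name\":" + quote(name)
                + ",\"description\":" + quote(description)
                + ",\"inputSchema\":{\"type\":\"object\",\"properties\":{" + properties + "}"
                + ",\"required\":[" + required + "]}}";
    }
}
```

In Spring AI you would not hand-write this JSON; the point is that the same descriptor works whichever model sits on the other end of the connection.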

How the Spring AI MCP Server Actually Works

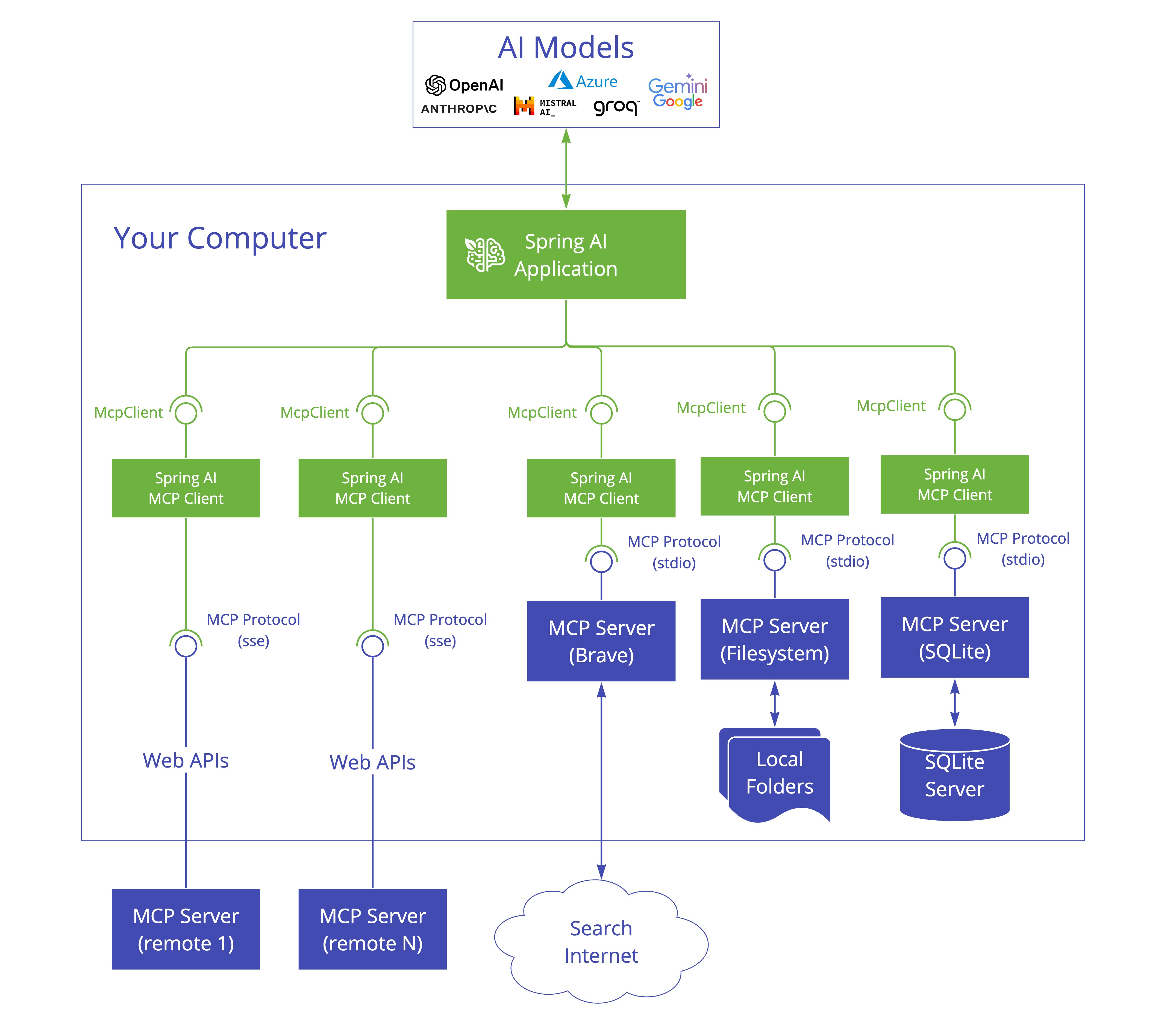

The architecture is surprisingly elegant. In the Spring ecosystem, you can now run an MCP server that exposes specific "resources," "prompts," and "tools."


The Spring AI MCP server architecture involves a few moving parts. First, you have the host: usually your AI orchestration layer or a desktop app like Claude Desktop, which runs the MCP client that connects to servers. Then you have the server. Using Spring Boot, you can wrap your existing services into an MCP server with very little boilerplate.

Let's say you have a legacy database.

Instead of writing a complex RAG (Retrieval-Augmented Generation) pipeline, you can create a Spring Boot application that implements the MCP server interface. You expose a tool called query_database. When the user asks the AI, "How many widgets did we sell in Ohio?", the AI sees the tool available via the MCP server, calls it, and gets the real-time answer.
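On the server side, a tool is ultimately a method with a narrow contract. The sketch below shows what might sit behind a query_database-style tool for the Ohio question. It is plain Java, with an in-memory list standing in for the legacy database; the `SalesTool` class, the `Sale` record, and the sample rows are hypothetical. In a real Spring AI server you would expose a method like this as a tool and back it with your existing repository or `JdbcTemplate` code.

```java
import java.util.List;

// Hypothetical row from the legacy sales database.
record Sale(String product, String state, int quantity) {}

// Hypothetical tool body: answers "how many <product> did we sell in <state>?"
// Typed parameters (instead of raw SQL written by the model) keep the tool narrow.
class SalesTool {
    private final List<Sale> sales;

    SalesTool(List<Sale> sales) {
        this.sales = sales;
    }

    // Sums quantities for matching rows; matching is case-insensitive.
    int unitsSold(String product, String state) {
        return sales.stream()
                .filter(s -> s.product().equalsIgnoreCase(product))
                .filter(s -> s.state().equalsIgnoreCase(state))
                .mapToInt(Sale::quantity)
                .sum();
    }

    public static void main(String[] args) {
        SalesTool tool = new SalesTool(List.of(
                new Sale("widget", "Ohio", 120),
                new Sale("widget", "Ohio", 30),
                new Sale("widget", "Pennsylvania", 75),
                new Sale("gadget", "Ohio", 10)));
        // The model would send arguments like {"product":"widget","state":"Ohio"}.
        System.out.println(tool.unitsSold("widget", "Ohio")); // prints 150
    }
}
```

Keeping the tool parameterized, rather than letting the model submit arbitrary SQL, limits what a confused or manipulated model can do to the database.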

The Magic of Tool Calling

Spring AI's tool-calling support makes this happen (through the `FunctionCallback` interface in early releases, since superseded by `ToolCallback`). But with the MCP integration, it goes a step further. You aren't just hardcoding tools into a single app. You are creating a modular ecosystem.

You can have one MCP server for your CRM, another for your logs, and another for your cloud infrastructure. Your Spring AI-powered "agent" can then connect to all of them simultaneously. It's decentralized intelligence.
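One practical wrinkle when an agent connects to several servers at once is that tool names can collide: a CRM server and a log server might both expose a `search` tool. The sketch below merges tool lists from several servers into one catalog and routes each call back to its owner. The `McpServerHandle` interface, the `FakeServer` test double, and the `server__tool` naming convention are all assumptions for illustration, not part of Spring AI or the MCP specification.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for a client connection to one MCP server.
interface McpServerHandle {
    String serverName();
    List<String> toolNames();
    String call(String toolName, String argument);
}

// Hypothetical in-memory server used for demonstration and testing.
record FakeServer(String serverName, List<String> toolNames) implements McpServerHandle {
    @Override
    public String call(String toolName, String argument) {
        return serverName + ":" + toolName + "(" + argument + ")";
    }
}

// Hypothetical agent-side catalog that merges tools from several servers.
class ToolCatalog {
    // e.g. "crm__search" -> the server that owns "search"; insertion order is kept.
    private final Map<String, McpServerHandle> routes = new LinkedHashMap<>();

    void register(McpServerHandle server) {
        for (String tool : server.toolNames()) {
            // Prefix with the server name so same-named tools don't overwrite each other.
            routes.put(server.serverName() + "__" + tool, server);
        }
    }

    List<String> qualifiedToolNames() {
        return List.copyOf(routes.keySet());
    }

    // Strips the prefix and forwards the call to the owning server.
    String call(String qualifiedName, String argument) {
        McpServerHandle server = routes.get(qualifiedName);
        if (server == null) {
            throw new IllegalArgumentException("Unknown tool: " + qualifiedName);
        }
        String bareName = qualifiedName.substring(server.serverName().length() + 2);
        return server.call(bareName, argument);
    }
}
```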

Why Java Developers Should Care Right Now

Java is the backbone of the enterprise. While Python gets all the hype for AI research, Java is where the actual business logic lives. The Spring AI MCP server brings AI to the data, rather than trying to move massive enterprise datasets to the AI.

It's about security, too.

Running a local MCP server means your sensitive data doesn't necessarily have to live in a vector database managed by a third party. You control the server. You control what tools are exposed. You control the authentication.
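"You control what tools are exposed" can be made concrete with a permission check that runs before any tool is listed or executed. The sketch maps each exposed tool to the scope a caller must hold and hides everything else. The `ToolGate` class, the tool names, and the scope strings are hypothetical; in a Spring application the caller's scopes would typically come from Spring Security after the access token has been validated, which this sketch does not do.

```java
import java.util.Map;
import java.util.Set;
import java.util.stream.Collectors;

// Hypothetical gate: each exposed tool declares the scope a caller needs.
// Tools missing from the map are simply not exposed.
class ToolGate {
    private final Map<String, String> requiredScopeByTool;

    ToolGate(Map<String, String> requiredScopeByTool) {
        this.requiredScopeByTool = Map.copyOf(requiredScopeByTool);
    }

    // True only if the tool is exposed at all and the caller holds its scope.
    boolean isAllowed(String toolName, Set<String> callerScopes) {
        String scope = requiredScopeByTool.get(toolName);
        return scope != null && callerScopes.contains(scope);
    }

    // The tools to advertise to this caller; unauthorized tools stay invisible.
    Set<String> visibleTools(Set<String> callerScopes) {
        return requiredScopeByTool.keySet().stream()
                .filter(tool -> isAllowed(tool, callerScopes))
                .collect(Collectors.toUnmodifiableSet());
    }
}
```

Filtering the advertised list, not just rejecting calls, also means the model never sees tools it cannot use.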

Real-World Example: The Support Ticket Bot

Imagine a support engineer named Dave. Dave spends four hours a day looking up logs in Splunk and matching them to tickets in Zendesk.

By building a Spring AI MCP server that connects to both Splunk and Zendesk, Dave’s team can create an internal AI assistant. This assistant doesn't just guess. It uses the MCP tools to "fetch_logs" and "get_ticket_history."


The AI says: "I found a NullPointerException in the logs at 2:00 PM that matches the user's report."

That isn't a hallucination. It's a verified fact retrieved via the server.
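The "verified fact" comes from tools that return real records the model can quote. The sketch below fakes both sides with in-memory data: a hypothetical fetch_logs that returns ERROR entries near a reported time, and a hypothetical get_ticket_history that returns a ticket's events. It does not call the real Splunk or Zendesk APIs; the `LogEntry` record, the `SupportTools` class, the ticket ID, and the log lines are invented for illustration.

```java
import java.time.LocalTime;
import java.util.List;
import java.util.Map;

// Hypothetical log entry standing in for Splunk data.
record LogEntry(LocalTime time, String level, String message) {}

class SupportTools {
    private final List<LogEntry> logs;
    private final Map<String, List<String>> ticketHistory; // stands in for Zendesk

    SupportTools(List<LogEntry> logs, Map<String, List<String>> ticketHistory) {
        this.logs = logs;
        this.ticketHistory = ticketHistory;
    }

    // fetch_logs: ERROR entries within +/- windowMinutes of the reported time.
    // Simplification: windows that cross midnight are not handled.
    List<LogEntry> fetchLogs(LocalTime reportedAt, int windowMinutes) {
        LocalTime from = reportedAt.minusMinutes(windowMinutes);
        LocalTime to = reportedAt.plusMinutes(windowMinutes);
        return logs.stream()
                .filter(e -> e.level().equals("ERROR"))
                .filter(e -> !e.time().isBefore(from) && !e.time().isAfter(to))
                .toList();
    }

    // get_ticket_history: events recorded on a ticket, or an empty list if unknown.
    List<String> getTicketHistory(String ticketId) {
        return ticketHistory.getOrDefault(ticketId, List.of());
    }
}
```

Because the answer is assembled from what these methods return, the assistant can cite the exact log line and ticket event instead of paraphrasing from memory.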

Setting Up Your First Spring AI MCP Server

You don't need a PhD in Neural Networks to get this running. If you can write a @RestController, you can build an MCP server.

- Add the Dependencies: You'll need the `spring-ai-mcp` starter. It’s still evolving, so keep an eye on the experimental milestones in the Spring repository.

- Define Your Tools: Use the `@Tool` annotation (or the functional equivalent) to tell the server what it can actually do.

- Configure the Transport: MCP supports different transport layers. Stdio is common for local scripts, but for Spring apps, you're often looking at HTTP/SSE (Server-Sent Events).

- Register with the Client: Point an MCP client at your server's URL and hand the tools it discovers to your LLM client (like the Spring AI `ChatClient`).
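Annotation-driven registration boils down to the framework finding annotated methods and turning them into tool definitions. To show that mechanism without a Spring dependency, the sketch below defines its own hypothetical `@ToolSketch` annotation and discovers annotated methods with plain reflection. It illustrates the idea only; it is not Spring AI's `@Tool` implementation, and the `OrderTools` and `ToolScanner` classes are invented.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical annotation; Spring AI ships its own @Tool, this is only a stand-in.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface ToolSketch {
    String description();
}

// Invented service with one exposed tool and one internal helper.
class OrderTools {
    @ToolSketch(description = "Count open orders for a customer")
    public int countOpenOrders(String customerId) {
        return customerId.equals("C-42") ? 3 : 0;
    }

    public void internalHelper() {
        // Not annotated, so it is never exposed as a tool.
    }
}

class ToolScanner {
    // Maps tool name (the method name here) to its description, sorted for stable output.
    static Map<String, String> scan(Object bean) {
        Map<String, String> tools = new TreeMap<>();
        for (Method m : bean.getClass().getDeclaredMethods()) {
            ToolSketch t = m.getAnnotation(ToolSketch.class);
            if (t != null) {
                tools.put(m.getName(), t.description());
            }
        }
        return tools;
    }

    // Invokes an annotated method reflectively with a single argument.
    static Object invoke(Object bean, String toolName, Object arg) {
        for (Method m : bean.getClass().getDeclaredMethods()) {
            if (m.getName().equals(toolName) && m.isAnnotationPresent(ToolSketch.class)) {
                try {
                    return m.invoke(bean, arg);
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("Tool call failed: " + toolName, e);
                }
            }
        }
        throw new IllegalArgumentException("No such tool: " + toolName);
    }
}
```

Only annotated methods become tools, which is what keeps helpers like internalHelper off the model's menu.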

It's honestly that simple. The heavy lifting of JSON-RPC communication is handled under the hood.
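For reference, this is roughly the traffic the framework hides. MCP messages are JSON-RPC 2.0: the client invokes a tool with a `tools/call` request carrying the tool `name` and its `arguments`, and the server answers with a result whose `content` is a list of typed items (text, in this case) plus an `isError` flag. The sketch only assembles the two messages as strings so the shape is visible; the `ToolCallMessages` class, the tool name, and the values are hypothetical, and real code would use a JSON library.

```java
// Builds the two JSON-RPC 2.0 messages exchanged for one MCP tool call.
// String formatting stands in for a JSON library and assumes the inputs
// contain no characters that need escaping.
class ToolCallMessages {

    // Client -> server: invoke a tool by name with one string argument.
    static String request(int id, String toolName, String argName, String argValue) {
        return String.format(
                "{\"jsonrpc\":\"2.0\",\"id\":%d,\"method\":\"tools/call\","
                        + "\"params\":{\"name\":\"%s\",\"arguments\":{\"%s\":\"%s\"}}}",
                id, toolName, argName, argValue);
    }

    // Server -> client: the result echoes the request id so the client can match them.
    static String response(int id, String text, boolean isError) {
        return String.format(
                "{\"jsonrpc\":\"2.0\",\"id\":%d,"
                        + "\"result\":{\"content\":[{\"type\":\"text\",\"text\":\"%s\"}],\"isError\":%b}}",
                id, text, isError);
    }
}
```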

The Nuance: It’s Not All Sunshine

We have to be realistic. MCP is a young protocol: Anthropic introduced it in late 2024, and while it launched with a lot of momentum, the ecosystem is still catching up.

One major hurdle is state management. If your MCP server is stateless, the LLM has to provide all the context every time. If it's stateful, you've got to manage sessions across what might be a distributed system.
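If you do go stateful, the common pattern is to key all state by a session identifier that the client presents on every request, so each lookup is explicit. The sketch below is a hypothetical in-memory `SessionStore` that records which tools each session has called. On a single instance this works; across several instances the map would need to move to shared storage, or requests would need sticky routing, which is exactly the distributed-session cost described above.

```java
import java.util.List;
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical per-session state for a stateful MCP server.
// In-memory only: it is lost on restart and invisible to other instances.
class SessionStore {
    private final Map<String, List<String>> callsBySession = new ConcurrentHashMap<>();

    // Creates a session and returns its id for the client to send back.
    String open() {
        String id = UUID.randomUUID().toString();
        callsBySession.put(id, new CopyOnWriteArrayList<>());
        return id;
    }

    // Records a tool call; unknown ids fail loudly instead of creating state silently.
    void recordCall(String sessionId, String toolName) {
        List<String> calls = callsBySession.get(sessionId);
        if (calls == null) {
            throw new IllegalStateException("Unknown or expired session: " + sessionId);
        }
        calls.add(toolName);
    }

    // Immutable snapshot of the calls made in a session (empty if unknown).
    List<String> history(String sessionId) {
        return List.copyOf(callsBySession.getOrDefault(sessionId, List.of()));
    }

    void close(String sessionId) {
        callsBySession.remove(sessionId);
    }
}
```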

Then there's the "Small Model" problem.

Cheaper, smaller models often struggle to follow the complex instructions required for tool calling via MCP. You might find that while GPT-4o or Claude 3.5 Sonnet handles your Spring AI MCP server reliably, a small 7B-parameter model starts tripping over its own feet.
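One way to soften this on the server side is to validate arguments strictly and return a readable error instead of throwing. MCP tool results carry an `isError` flag for tool-level failures, which lets the model see what went wrong and retry with corrected arguments. The sketch below checks a hypothetical fetch_logs call; the `ToolResult` record, the `FetchLogsValidator` class, and the argument names are assumptions.

```java
import java.time.LocalTime;
import java.time.format.DateTimeParseException;
import java.util.Map;

// Hypothetical tool result mirroring MCP's text content plus isError flag.
record ToolResult(String text, boolean isError) {}

class FetchLogsValidator {
    // Checks the arguments a (possibly small) model produced for a hypothetical
    // fetch_logs tool shaped like {"service": "...", "time": "HH:mm"}.
    // Error messages include an example so the model can fix its next attempt.
    static ToolResult validate(Map<String, String> args) {
        String service = args.get("service");
        if (service == null || service.isBlank()) {
            return new ToolResult("Missing required argument 'service', e.g. \"checkout\".", true);
        }
        String time = args.get("time");
        if (time == null) {
            return new ToolResult("Missing required argument 'time' in HH:mm format, e.g. \"14:00\".", true);
        }
        try {
            LocalTime.parse(time); // ISO local time, so "14:00" parses and "2 PM" does not
        } catch (DateTimeParseException e) {
            return new ToolResult("Argument 'time' was \"" + time + "\"; expected HH:mm, e.g. \"14:00\".", true);
        }
        return new ToolResult("ok", false);
    }
}
```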

Strategic Moves for 2026

If you're an architect or a lead dev, you need to start thinking in terms of "MCP-first" integration. Stop building one-off integrations. Every internal API you write from now on should probably have an MCP server wrapper.

This isn't just about chat. It's about autonomous agents that can actually do work.


The Spring AI MCP server is the key to moving past the "chatbot" phase and into the "agentic" phase of software development. We are moving toward a world where software doesn't just wait for a human to click a button. It monitors its own environment and uses MCP tools to fix problems before the human even wakes up.

Actionable Next Steps

- Audit Your Data Sources: Identify the three most common data silos your team accesses manually. These are your first candidates for an MCP server.

- Prototype with Stdio: Start by building a simple command-line MCP server using Spring AI to understand the handshake process.

- Secure the Perimeter: Before deploying, implement robust OAuth2 protection on your MCP endpoints. An AI with tool access is a powerful—and dangerous—user.

- Monitor Token Usage: The names, descriptions, and input schemas of every tool you expose are typically sent to the model along with each request, so each tool consumes tokens whether or not it gets called. Keep tool descriptions concise but descriptive.
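For the stdio prototype, it helps to see what the handshake looks like on the wire. Over stdio, MCP messages are newline-delimited JSON-RPC, so a message must not contain embedded newlines. The client sends an `initialize` request, the server replies with its own capabilities, and the client then confirms with a `notifications/initialized` notification (no `id`, because notifications get no reply). The sketch shows only the client's two writes; the `StdioHandshake` class, client name, and version are hypothetical, and "2024-11-05" is one published protocol revision.

```java
import java.io.PrintWriter;

// Client side of the MCP stdio handshake, written as newline-delimited JSON-RPC.
class StdioHandshake {

    // stdio framing: one JSON message per line, so newlines inside a message are rejected.
    static void send(PrintWriter out, String json) {
        if (json.indexOf('\n') >= 0 || json.indexOf('\r') >= 0) {
            throw new IllegalArgumentException("MCP stdio messages must not contain newlines");
        }
        out.print(json);
        out.print('\n'); // explicit '\n' rather than println's platform line separator
        out.flush();
    }

    static void begin(PrintWriter toServer, String clientName) {
        // 1. Client proposes a protocol version and describes itself.
        send(toServer, "{\"jsonrpc\":\"2.0\",\"id\":1,\"method\":\"initialize\",\"params\":{"
                + "\"protocolVersion\":\"2024-11-05\",\"capabilities\":{},"
                + "\"clientInfo\":{\"name\":\"" + clientName + "\",\"version\":\"0.1.0\"}}}");
        // 2. Not shown: the server answers with its protocolVersion, capabilities, and
        //    serverInfo. A real client reads and checks that reply before step 3.
        // 3. Client confirms the handshake; a notification carries no id.
        send(toServer, "{\"jsonrpc\":\"2.0\",\"method\":\"notifications/initialized\"}");
    }
}
```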

The era of the isolated LLM is over. By leveraging the Spring AI MCP server, you’re giving your applications a way to communicate, act, and reason within the context of your specific business logic. It’s time to stop talking to the AI and start letting the AI work for you.